Preparing the environment

Server overview

I am using five CentOS 7.7 virtual machines; the details are listed in the table below:

| OS version | IP address | Node role | CPU | Memory | Hostname |
|---|---|---|---|---|---|
| CentOS-7.7 | 192.168.243.143 | master | >=2 | >=2G | m1 |
| CentOS-7.7 | 192.168.243.144 | master | >=2 | >=2G | m2 |
| CentOS-7.7 | 192.168.243.145 | master | >=2 | >=2G | m3 |
| CentOS-7.7 | 192.168.243.146 | worker | >=2 | >=2G | n1 |
| CentOS-7.7 | 192.168.243.147 | worker | >=2 | >=2G | n2 |
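
The node list in this table is reused in almost every later step (the /etc/hosts entries, the `for i in m1 m2 m3` loops). As a rough sketch, it could be kept in one place and queried from scripts. The function names `inventory`, `hosts_entries`, and `nodes_with_role` are my own illustration and do not appear in the original steps.

```shell
# Node inventory taken from the table above: "IP hostname role" per line.
inventory() {
  cat <<'EOF'
192.168.243.143 m1 master
192.168.243.144 m2 master
192.168.243.145 m3 master
192.168.243.146 n1 worker
192.168.243.147 n2 worker
EOF
}

# Print "/etc/hosts"-style lines ("IP hostname") for every node.
hosts_entries() {
  inventory | while read -r ip host role; do
    printf '%s %s\n' "$ip" "$host"
  done
}

# Print the hostnames that have the given role, space-separated on one line.
nodes_with_role() {
  inventory | awk -v r="$1" '$3 == r { printf "%s%s", sep, $2; sep = " " } END { print "" }'
}
```

With these in place, a loop such as `for i in $(nodes_with_role master); do ...; done` would iterate over m1, m2, and m3 without repeating the list by hand.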

Docker must be installed on all five machines beforehand. The installation is straightforward, so it is not covered here; see the official documentation:

- https://docs.docker.com/engine/install/centos/

Software versions:

- k8s: 1.19.0

- etcd: 3.4.13

- coredns: 1.7.0

- pause: 3.2

- calico: 3.16.0

- cfssl: 1.2.0

- kubernetes dashboard: 2.0.3

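The following IP, port, and other network settings are used throughout the cluster setup and will not be explained again later:

# IPs of the 3 master nodes

192.168.243.143

192.168.243.144

192.168.243.145

# IPs of the 2 worker nodes

192.168.243.146

192.168.243.147

# Hostnames of the 3 master nodes

m1, m2, m3

# Highly available virtual IP for api-server (used by keepalived, customizable)

192.168.243.101

# Network interface used by keepalived; usually eth0, run ip a to check

ens32

# kubernetes service IP range (customizable)

10.255.0.0/16

# IP of the kubernetes api-server service, usually the first IP in the service CIDR (customizable)

10.255.0.1

# IP of the DNS service, usually the second IP in the service CIDR (customizable)

10.255.0.2

# Pod network range (customizable)

172.23.0.0/16

# NodePort range (customizable)

8400-8900

System settings (all nodes)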

1. Every node must have a unique hostname, and all nodes must be able to reach each other by hostname. Set the hostname:

# Show the hostname

$ hostname

# Change the hostname

$ hostnamectl set-hostname <your_hostname>

Configure hosts so that all nodes can reach each other by hostname:

$ vim /etc/hosts

192.168.243.143 m1

192.168.243.144 m2

192.168.243.145 m3

192.168.243.146 n1

192.168.243.147 n2

2. Install the dependency packages:

# Update packages with yum

$ yum update -y

# Install the dependency packages

$ yum install -y conntrack ipvsadm ipset jq sysstat curl wget iptables libseccomp

3. Disable the firewall and swap, and reset iptables:

# Disable the firewall

$ systemctl stop firewalld && systemctl disable firewalld

# Reset iptables

$ iptables -F && iptables -X && iptables -F -t nat && iptables -X -t nat && iptables -P FORWARD ACCEPT

# Disable swap

$ swapoff -a

$ sed -i '/swap/s/^\(.*\)$/#\1/g' /etc/fstab

# Disable selinux

$ setenforce 0

# Disable dnsmasq (otherwise docker containers may fail to resolve domain names)

$ service dnsmasq stop && systemctl disable dnsmasq

# Restart the docker service

$ systemctl restart docker

4. Kernel parameter settings:

# Create the configuration file

$ cat > /etc/sysctl.d/kubernetes.conf <<EOF

net.bridge.bridge-nf-call-iptables=1

net.bridge.bridge-nf-call-ip6tables=1

net.ipv4.ip_forward=1

vm.swappiness=0

vm.overcommit_memory=1

vm.panic_on_oom=0

fs.inotify.max_user_watches=89100

EOF

# Apply the file

$ sysctl -p /etc/sysctl.d/kubernetes.conf

Preparing the binaries (all nodes)

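Configuring passwordless SSH login

A binary installation requires the k8s component executables on every node, so the prepared binaries have to be copied to each node. To make copying easier, pick one relay node (any node will do) and set up passwordless login from it to all the other nodes, so that passwords do not have to be typed repeatedly while copying.

I use m1 as the relay node. First, create a key pair on m1: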

[root@m1 ~]# ssh-keygen -t rsa

Generating public/private rsa key pair.

Enter file in which to save the key (/root/.ssh/id_rsa):

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /root/.ssh/id_rsa.

Your public key has been saved in /root/.ssh/id_rsa.pub.

The key fingerprint is:

SHA256:9CVdxUGLSaZHMwzbOs+aF/ibxNpsUaaY4LVJtC3DJiU root@m1

The key's randomart image is:

+---[RSA 2048]----+

| .o*o=o|

| E +Bo= o|

| . *o== . |

| . + @o. o |

| S BoO + |

| . *=+= |

| .=o |

| B+. |

| +o=. |

+----[SHA256]-----+

[root@m1 ~]#

View the public key:

[root@m1 ~]# cat ~/.ssh/id_rsa.pub

ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABAQDF99/mk7syG+OjK5gFFKLZDpMWcF3BEF1Gaa8d8xNIMKt2qGgxyYOC7EiGcxanKw10MQCoNbiAG1UTd0/wgp/UcPizvJ5AKdTFImzXwRdXVbMYkjgY2vMYzpe8JZ5JHODggQuGEtSE9Q/RoCf29W2fIoOKTKaC2DNyiKPZZ+zLjzQr8sJC3BRb1Tk4p8cEnTnMgoFwMTZD8AYMNHwhBeo5NXZSE8zyJiWCqQQkD8n31wQxVgSL9m3rD/1wnsBERuq3cf7LQMiBTxmt1EyqzqM4S1I2WEfJkT0nJZeY+zbHqSJq2LbXmCmWUg5LmyxaE9Ksx4LDIl7gtVXe99+E1NLd root@m1

[root@m1 ~]#

Then copy the contents of id_rsa.pub into the authorized keys file on the other machines by running the following command on each of the other nodes (replace the public key with the one you generated):

$ echo "ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABAQDF99/mk7syG+OjK5gFFKLZDpMWcF3BEF1Gaa8d8xNIMKt2qGgxyYOC7EiGcxanKw10MQCoNbiAG1UTd0/wgp/UcPizvJ5AKdTFImzXwRdXVbMYkjgY2vMYzpe8JZ5JHODggQuGEtSE9Q/RoCf29W2fIoOKTKaC2DNyiKPZZ+zLjzQr8sJC3BRb1Tk4p8cEnTnMgoFwMTZD8AYMNHwhBeo5NXZSE8zyJiWCqQQkD8n31wQxVgSL9m3rD/1wnsBERuq3cf7LQMiBTxmt1EyqzqM4S1I2WEfJkT0nJZeY+zbHqSJq2LbXmCmWUg5LmyxaE9Ksx4LDIl7gtVXe99+E1NLd root@m1" >> ~/.ssh/authorized_keys

Test whether passwordless login works. Here, logging in to m2 no longer asks for a password:

[root@m1 ~]# ssh m2

Last login: Fri Sep 4 15:55:59 2020 from m1

[root@m2 ~]#

Downloading the binaries

下载Kubernetes

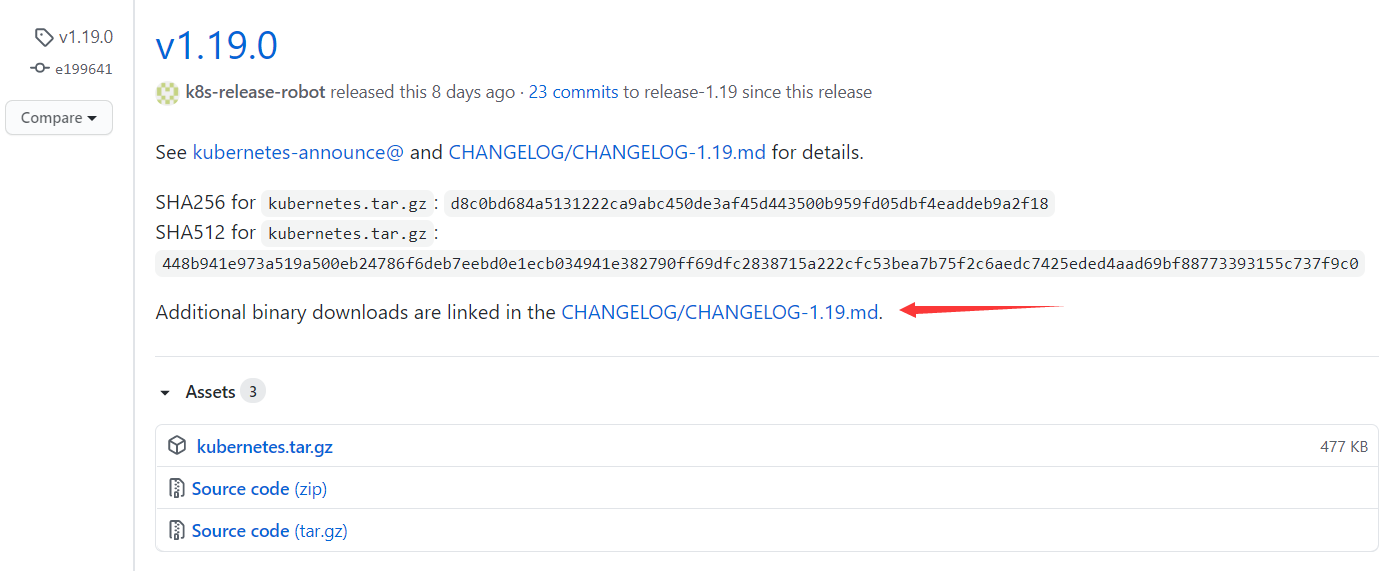

我们首先下载k8^ x a ^ 1 ` g 4s的二进制文件,k8s的官方下/ V f ] V _ 1载地址如下:

- https://github.com/kubernetes/kubernetes/releases

我这里下载的是1.19.0版本,注意下载链接是在CHANGELOG/CHANGELOG-1W e 4.19.md里面:

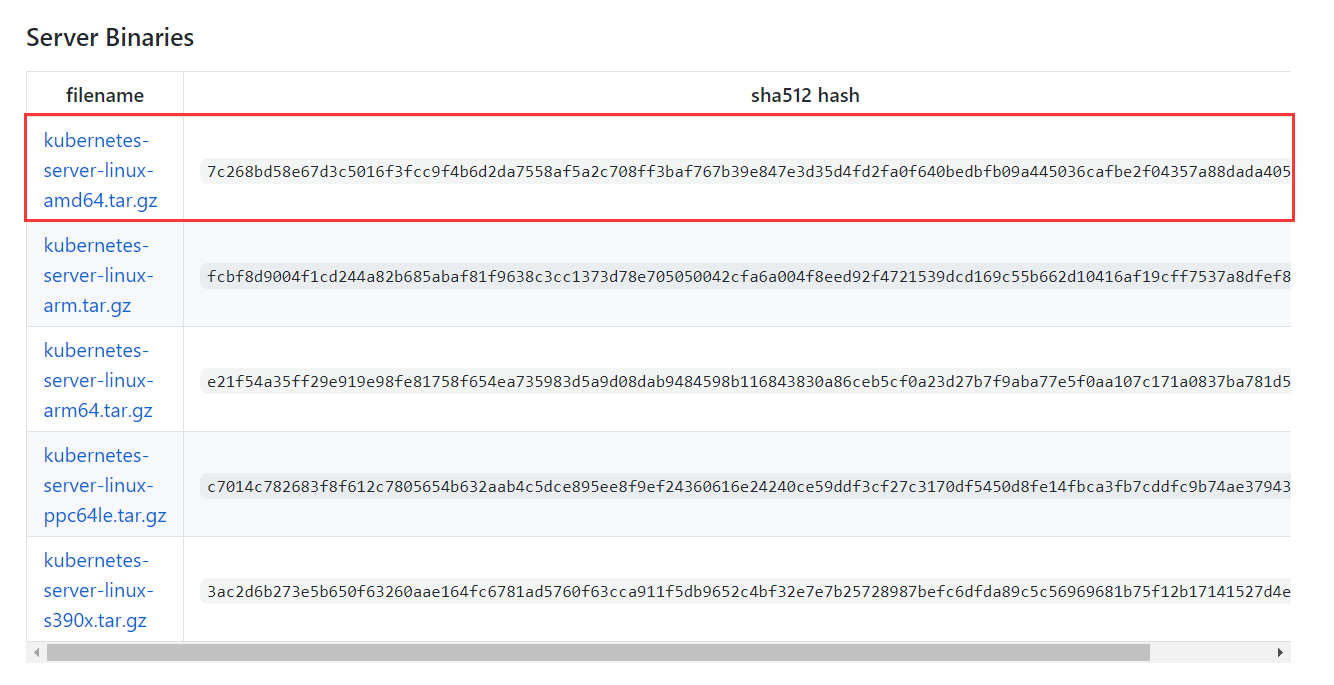

只需要在“Server Binaries”一栏选] N _ Q 1 Y择对应的平台架构下载即可,因为Server的压缩包里已经包含了Node和Client的二进制文件:
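
As a small sketch of picking the right archive, the helpers below build the Server Binaries URL from a version and architecture, following the pattern of the link used in this article (https://dl.k8s.io/v1.19.0/kubernetes-server-linux-amd64.tar.gz). The function names `k8s_arch` and `k8s_server_url` are hypothetical, and only the two most common `uname -m` values are mapped; treat anything else as an assumption to check against the CHANGELOG page.

```shell
# Map `uname -m` output to the architecture suffix used in release file names.
# Only two common cases are handled; any other value is passed through as-is.
k8s_arch() {
  case "$1" in
    x86_64)  echo amd64 ;;
    aarch64) echo arm64 ;;
    *)       echo "$1" ;;
  esac
}

# Build the server tarball URL for a version (e.g. v1.19.0) and architecture.
k8s_server_url() {
  version=$1
  arch=$2
  echo "https://dl.k8s.io/${version}/kubernetes-server-linux-${arch}.tar.gz"
}
```

For example, `wget "$(k8s_server_url v1.19.0 "$(k8s_arch "$(uname -m)")")"` would fetch the archive matching the current machine.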

Copy the download link, then download and extract the archive on the system:

[root@m1 ~]# cd /usr/local/src

[root@m1 /usr/local/src]# wget https://dl.k8s.io/v1.19.0/kubernetes-server-linux-amd64.tar.gz # download

[root@m1 /usr/local/src]# tar -zxvf kubernetes-server-linux-amd64.tar.gz # extract

The k8s binaries are all located in the kubernetes/server/bin/ directory:

[root@m1 /usr/local/src]# ls kubernetes/server/bin/

apiextensions-apiserver kube-apiserver kube-controller-manager kubectl kube-proxy.docker_tag kube-scheduler.docker_tag

kubeadm kube-apiserver.docker_tag kube-controller-manager.docker_tag kubelet kube-proxy.tar kube-scheduler.tar

kube-aggregator kube-apiserver.tar kube-controller-manager.tar kube-proxy kube-scheduler mounter

[root@m1 /usr/local/src]#

To make copying easier later, organize the files so that the binaries needed by each type of node sit in the same directory. The steps are:

[root@m1 /usr/local/src]# mkdir -p k8s-master k8s-worker

[root@m1 /usr/local/src]# cd kubernetes/server/bin/

[root@m1 /usr/local/src/kubernetes/server/bin]# for i in kubeadm kube-apiserver kube-controller-manager kubectl kube-scheduler;do cp $i /usr/local/src/k8s-master/; done

[root@m1 /usr/local/src/kubernetes/server/bin]# for i in kubelet kube-proxy;do cp $i /usr/local/src/k8s-worker/; done

[root@m1 /usr/local/src/kubernetes/server/bin]#

After this, the files are in their respective directories: k8s-master holds the binaries needed by master nodes, and k8s-worker holds those needed by worker nodes:

[root@m1 /usr/local/src/kubernetes/server/bin]# cd /usr/local/src

[root@m1 /usr/local/src]# ls k8s-master/

kubeadm kube-apiserver kube-controller-manager kubectl kube-scheduler

[root@m1 /usr/local/src]# ls k8s-worker/

kubelet kube-proxy

[root@m1 /usr/local/src]#

Downloading etcd

k8s relies on etcd for distributed storage, so next we also need to download etcd. The official download page is:

- https://github.com/etcd-io/etcd/releases

Likewise, copy the download link, download the archive with wget, and extract it:

[root@m1 /usr/local/src]# wget https://github.com/etcd-io/etcd/releases/download/v3.4.13/etcd-v3.4.13-linux-amd64.tar.gz

[root@m1 /usr/local/src]# mkdir etcd && tar -zxvf etcd-v3.4.13-linux-amd64.tar.gz -C etcd --strip-components 1

[root@m1 /usr/local/src]# ls etcd

Documentation etcd etcdctl README-etcdctl.md README.md READMEv2-etcdctl.md

[root@m1 /usr/local/src]#

Copy the etcd binaries into the k8s-master directory:

[root@m1 /usr/local/src]# cd etcd

[root@m1 /usr/local/src/etcd]# for i in etcd etcdctl;do cp $i /usr/local/src/k8s-master/; done

[root@m1 /usr/local/src/etcd]# ls ../k8s-master/

etcd etcdctl kubeadm kube-apiserver kube-controller-manager kubectl kube-scheduler

[root@m1 /usr/local/src/etcd]#

Distributing the files and setting PATH

Create the /opt/kubernetes/bin directory on all nodes:

$ mkdir -p /opt/kubernetes/bin

Distribute the binaries to the corresponding nodes:

[root@m1 /usr/local/src]# for i in m1 m2 m3; do scp k8s-master/* $i:/opt/kubernetes/bin/; done

[root@m1 /usr/local/src]# for i in n1 n2; do scp k8s-worker/* $i:/opt/kubernetes/bin/; done

Set the PATH environment variable on every node:

[root@m1 /usr/local/src]# for i in m1 m2 m3 n1 n2; do ssh $i "echo 'PATH=/opt/kubernetes/bin:\$PATH' >> ~/.bashrc"; done

Deploying the high-availability cluster

Generating the CA certificate (any node)

Installing cfssl

cfssl is a very handy CA tool; we use it to generate the certificates and key files. Installation is simple, and I install it on m1. First, download the cfssl binaries:

[root@m1 ~]# mkdir -p ~/bin

[root@m1 ~]# wget https://pkg.cfssl.org/R1.2/cfssl_linux-amd64 -O ~/bin/cfssl

[root@m1 ~]# wget https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64 -O ~/bin/cfssljson

Make both files executable:

[root@m1 ~]# chmod +x ~/bin/cfssl ~/bin/cfssljson

Set the PATH environment variable:

[root@m1 ~]# vim ~/.bashrc

PATH=~/bin:$PATH

[root@m1 ~]# source ~/.bashrc

Verify that it runs correctly:

[root@m1 ~]# cfssl version

Version: 1.2.0

Revision: dev

Runtime: go1.6

[root@m1 ~]#

Generating the root certificate

The root certificate is shared by all nodes in the cluster, so only one CA certificate is needed; every certificate created later is signed by it. First create a ca-csr.json file with the following content:

{

"CN": "kubernetes",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "BeiJing",

"L": "BeiJing",

"O": "k8s",

"OU": "seven"

}

]

}

Run the following command to generate the certificate and private key:

[root@m1 ~]# cfssl gencert -initca ca-csr.json | cfssljson -bare ca

This produces the following files (the two we need are ca-key.pem and ca.pem: a private key and a certificate):

[root@m1 ~]# ls *.pem

ca-key.pem ca.pem

[root@m1 ~]#

Distribute these two files to every master node:

[root@m1 ~]# for i in m1 m2 m3; do ssh $i "mkdir -p /etc/kubernetes/pki/"; done

[root@m1 ~]# for i in m1 m2 m3; do scp *.pem $i:/etc/kubernetes/pki/; done

Deploying the etcd cluster (master nodes)

Generating the certificate and private key

Next, generate the certificate and private key used by the etcd nodes. Create a ca-config.json file with the following content:

{

"signing": {

"default": {

"expiry": "87600h"

},

"profiles": {

"kubernetes": {

"usages": [

"signing",

"key encipherment",

"server auth",

"client auth"

],

"expiry": "87600h"

}

}

}

}

Then create an etcd-csr.json file with the following content:

{

"CN": "etcd",

"hosts": [

"127.0.0.1",

"192.168.243.143",

"192.168.243.144",

"192.168.243.145"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "BeiJing",

"L": "BeiJing",

"O": "k8s",

"OU": "seven"

}

]

}

- The IPs in hosts are the IPs of the master nodes
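
Because the addresses in hosts end up as Subject Alternative Names in the certificate, it can be worth confirming, once etcd.pem has been generated in the next step, that every master IP really made it in. The sketch below is one way to do that. `missing_sans` is a hypothetical helper of my own, not part of cfssl, and it assumes the usual `openssl x509 -text` layout in which SAN entries appear as `IP Address:<ip>` separated by commas.

```shell
# Read `openssl x509 -noout -text` output on stdin and print every required
# IP (given as arguments) that is NOT listed as an "IP Address:" SAN entry.
missing_sans() {
  # Split comma-separated entries into one trimmed entry per line.
  sans=$(tr ',' '\n' | sed 's/^[[:space:]]*//; s/[[:space:]]*$//')
  for want in "$@"; do
    # -F: fixed string, -x: whole-line match, so 192.168.243.14 does not
    # accidentally match 192.168.243.143.
    printf '%s\n' "$sans" | grep -Fxq "IP Address:${want}" || printf '%s\n' "$want"
  done
}
```

A possible usage would be `openssl x509 -in etcd.pem -noout -text | missing_sans 127.0.0.1 192.168.243.143 192.168.243.144 192.168.243.145`; empty output would mean all four addresses are covered.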

With these two files in place, generate the etcd certificate and private key with the following command:

[root@m1 ~]# cfssl gencert -ca=ca.pem \

-ca-key=ca-key.pem \

-config=ca-config.json \

-profile=kubernetes etcd-csr.json | cfssljson -bare etcd

[root@m1 ~]# ls etcd*.pem # two files are generated on success

etcd-key.pem etcd.pem

[root@m1 ~]#

Then distribute these two files to every etcd node:

[root@m1 ~]# for i in m1 m2 m3; do scp etcd*.pem $i:/etc/kubernetes/pki/; done

Creating the service file

Create an etcd.service file so that the etcd service can later be started, stopped, and restarted with systemctl. Its content is as follows:

[Unit]

Description=Etcd Server

After=network.target

After=network-online.target

Wants=network-online.target

Documentation=https://github.com/coreos

[Service]

Type=notify

WorkingDirectory=/var/lib/etcd/

ExecStart=/opt/kubernetes/bin/etcd \

--data-dir=/var/lib/etcd \

--name=m1 \

--cert-file=/etc/kubernetes/pki/etcd.pem \

--key-file=/etc/kubernetes/pki/etcd-key.pem \

--trusted-ca-file=/etc/kubernetes/pki/ca.pem \

--peer-cert-file=/etc/kubernetes/pki/etcd.pem \

--peer-key-file=/etc/kubernetes/pki/etcd-key.pem \

--peer-trusted-ca-file=/etc/kubernetes/pki/ca.pem \

--peer-client-cert-auth \

--client-cert-auth \

--listen-peer-urls=https://192.168.243.143:2380 \

--initial-advertise-peer-urls=https://192.168.243.143:2380 \

--listen-client-urls=https://192.168.243.143:2379,http://127.0.0.1:2379 \

--advertise-client-urls=https://192.168.243.143:2379 \

--initial-cluster-token=etcd-cluster-0 \

--initial-cluster=m1=https://192.168.243.143:2380,m2=https://192.168.243.144:2380,m3=https://192.168.243.145:2380 \

--initial-cluster-state=new

Restart=on-failure

RestartSec=5

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

Distribute this file to every master node:

[root@m1 ~]# for i in m1 m2 m3; do scp etcd.service $i:/etc/systemd/system/; done

After distribution, edit etcd.service on every master node other than m1. The main changes are:

# Change to the hostname of the node

--name=m1

# For the following items, change the IP to the IP of the node; leave the loopback IP (127.0.0.1) unchanged

--listen-peer-urls=https://192.168.243.143:2380

--initial-advertise-peer-urls=https://192.168.243.143:2380

--listen-client-urls=https://192.168.243.143:2379,http://127.0.0.1:2379

--advertise-client-urls=https://192.168.243.143:2379

Then create the etcd working directory on every master node:

[root@m1 ~]# for i in m1 m2 m3; do ssh $i "mkdir -p /var/lib/etcd"; done

Starting the service

Run the following command on each etcd node to start the etcd service:

$ systemctl daemon-reload && systemctl enable etcd && systemctl restart etcd

- Tip: when the etcd process starts for the first time, it waits for etcd on the other nodes to join the cluster, so the systemctl start etcd command hangs for a while. This is normal.
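
Because the first start blocks until the other members are up, a script that drives all three nodes may prefer to poll for the service instead of assuming it is ready right away. The following is only a sketch: `wait_until` is a hypothetical helper name, and the attempt count and delay are arbitrary values of my choosing.

```shell
# Retry a command until it succeeds or the attempts run out.
# Usage: wait_until ATTEMPTS DELAY_SECONDS COMMAND [ARGS...]
# Returns 0 as soon as COMMAND succeeds, 1 if every attempt failed.
wait_until() {
  attempts=$1
  delay=$2
  shift 2
  n=1
  while [ "$n" -le "$attempts" ]; do
    if "$@"; then
      return 0
    fi
    # Sleep only between attempts, not after the last failure.
    if [ "$n" -lt "$attempts" ]; then
      sleep "$delay"
    fi
    n=$((n + 1))
  done
  return 1
}
```

For instance, `wait_until 30 2 systemctl is-active --quiet etcd` would check every 2 seconds, up to 30 times, whether the etcd unit has become active.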

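Check the service status; active (running) means it started successfully:

$ systemctl status etcd

If it failed to start, check the startup log to troubleshoot:

$ journalctl -f -u etcd

Deploying api-server (master nodes)

Generating the certificate and private key

As before, the first step is to generate the api-server certificate and private key. Create a kubernetes-csr.json file with the following content: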

{

"CN": "kubernetes",

"hosts": [

"127.0.0.1",

"192.168.243.143",

"192.168.243.144",

"192.168.243.145",

"192.168.243.101",

"10.255.0.1",

"kubernetes",

"kubernetes.default",

"kubernetes.default.svc",

"kubernetes.default.svc.cluster",

"kubernetes.default.svc.cluster.local"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "BeiJing",

"L": "BeiJing",

"O": "k8s",

"OU": "seven"

}

]

}

Generate the certificate and private key:

[root@m1 ~]# cfssl gencert -ca=ca.pem \

-ca-key=ca-key.pem \

-config=ca-config.json \

-profile=kubernetes kubernetes-csr.json | cfssljson -bare kubernetes

[root@m1 ~]# ls kubernetes*.pem

kubernetes-key.pem kubernetes.pem

[root@m1 ~]#

Distribute them to every master node:

[root@m1 ~]# for i in m1 m2 m3; do scp kubernetes*.pem $i:/etc/kubernetes/pki/; done

Creating the service file

Create a kube-apiserver.service file with the following content:

[Unit]

Description=Kubernetes API Server

Documentation=https://github.com/GoogleCloudPlatform/kubernetes

After=network.target

[Service]

ExecStart=/opt/kubernetes/bin/kube-apiserver \

--enable-admission-plugins=NamespaceLifecycle,NodeRestriction,LimitRanger,ServiceAccount,DefaultStorageClass,ResourceQuota \

--anonymous-auth=false \

--advertise-address=192.168.243.143 \

--bind-address=0.0.0.0 \

--insecure-port=0 \

--authorization-mode=Node,RBAC \

--runtime-config=api/all=true \

--enable-bootstrap-token-auth \

--service-cluster-ip-range=10.255.0.0/16 \

--service-node-port-range=8400-8900 \

--tls-cert-file=/etc/kubernetes/pki/kubernetes.pem \

--tls-private-key-file=/etc/kubernetes/pki/kubernetes-key.pem \

--client-ca-file=/etc/kubernetes/pki/ca.pem \

--kubelet-client-certificate=/etc/kubernetes/pki/kubernetes.pem \

--kubelet-client-key=/etc/kubernetes/pki/kubernetes-key.pem \

--service-account-key-file=/etc/kubernetes/pki/ca-key.pem \

--etcd-cafile=/etc/kubernetes/pki/ca.pem \

--etcd-certfile=/etc/kubernetes/pki/kubernetes.pem \

--etcd-keyfile=/etc/kubernetes/pki/kubernetes-key.pem \

--etcd-servers=https://192.168.243.143:2379,https://192.168.243.144:2379,https://192.168.243.145:2379 \

--enable-swagger-ui=true \

--allow-privileged=true \

--apiserver-count=3 \

--audit-log-maxage=30 \

--audit-log-maxbackup=3 \

--audit-log-maxsize=100 \

--audit-log-path=/var/log/kube-apiserver-audit.log \

--event-ttl=1h \

--alsologtostderr=true \

--logtostderr=false \

--log-dir=/var/log/kubernetes \

--v=2

Restart=on-failure

RestartSec=5

Type=notify

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

Distribute this file to every master node:

[root@m1 ~]# for i in m1 m2 m3; do scp kube-apiserver.service $i:/etc/systemd/system/; done

After distribution, edit kube-apiserver.service on every master node other than m1. Only one item needs to change:

# Change to the IP of the node

--advertise-address=192.168.243.143

Then create the api-server log directory on all master nodes:

[root@m1 ~]# for i in m1 m2 m3; do ssh $i "mkdir -p /var/log/kubernetes"; done

Starting the service

Run the following command on each master node to start the api-server service:

$ systemctl daemon-reload && systemctl enable kube-apiserver && systemctl restart kube-apiserver

Check the service status; active (running) means it started successfully:

$ systemctl status kube-apiserver

Check that port 6443 is being listened on:

[root@m1 ~]# netstat -lntp |grep 6443

tcp6 0 0 :::6443 :::* LISTEN 24035/kube-apiserve

[root@m1 ~]#

If it failed to start, check the startup log to troubleshoot:

$ journalctl -f -u kube-apiserver

Deploying keepalived to make api-server highly available (master nodes)

Installing keepalived

keepalived only needs to be installed on two master nodes (one master, one backup). I install it on m1 and m2:

$ yum install -y keepalived

Creating the keepalived configuration files

Create a directory for the keepalived configuration files on m1 and m2:

[root@m1 ~]# for i in m1 m2; do ssh $i "mkdir -p /etc/keepalived"; done

Create the following configuration file on m1 (the MASTER role):

[root@m1 ~]# mv /etc/keepalived/keepalived.conf /etc/keepalived/keepalived.conf.back

[root@m1 ~]# vim /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

router_id keepalive-master

}

vrrp_script check_apiserver {

# Path of the check script

script "/etc/keepalived/check-apiserver.sh"

# Check interval in seconds

interval 3

# Lower the priority by 2 on failure

weight -2

}

vrrp_instance VI-kube-master {

state MASTER # node role

interface ens32 # network interface name

virtual_router_id 68

priority 100

dont_track_primary

advert_int 3

virtual_ipaddress {

# Custom virtual IP

192.168.243.101

}

track_script {

check_apiserver

}

}

Create the following configuration file on m2 (the BACKUP role):

[root@m2 ~]# mv /etc/keepalived/keepalived.conf /etc/keepalived/keepalived.conf.back

[root@m2 ~]# vim /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

router_id keepalive-backup

}

vrrp_script check_apiserver {

script "/etc/keepalived/check-apiserver.sh"

interval 3

weight -2

}

vrrp_instance VI-kube-master {

state BACKUP

interface ens32

virtual_router_id 68

priority 99

dont_track_primary

advert_int 3

virtual_ipaddress {

192.168.243.101

}

track_script {

check_apiserver

}

}

Create the keepalived check script on both m1 and m2:

$ vim /etc/keepalived/check-apiserver.sh # create the check script with the following content

#!/bin/sh

errorExit() {

echo "*** $*" 1>&2

exit 1

}

# Check whether the local api-server is healthy

curl --silent --max-time 2 --insecure https://localhost:6443/ -o /dev/null || errorExit "Error GET https://localhost:6443/"

# If the virtual IP is bound to this machine, check that api-server is reachable through the virtual IP

if ip addr | grep -q 192.168.243.101; then

curl --silent --max-time 2 --insecure https://192.168.243.101:6443/ -o /dev/null || errorExit "Error GET https://192.168.243.101:6443/"

fi

Starting keepalived

Start the keepalived service on both the master and the backup:

$ systemctl enable keepalived && service keepalived start

Check the service status; active (running) means it started successfully:

$ systemctl status keepalived

Check that the virtual IP is bound:

$ ip a |grep 192.168.243.101

Test access; getting a response means the service is running:

[root@m1 ~]# curl --insecure https://192.168.243.101:6443/

{

"kind": "Status",

"apiVersion": "v1",

"metadata": {

},

"status": "Failure",

"message": "Unauthorized",

"reason": "Unauthorized",

"code": 401

}

[root@m1 ~]#

If it failed to start, check the log to troubleshoot:

$ journalctl -f -u keepalived

Deploying kubectl (any node)

kubectl is the command-line management tool for a kubernetes cluster. By default it reads the kube-apiserver address, certificates, user name, and other information from the ~/.kube/config file.
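
For reference, ~/.kube/config is only the fallback: when the KUBECONFIG environment variable is set, kubectl reads the colon-separated files listed there instead, and an explicit --kubeconfig flag (as used in the steps below) takes precedence over both. The sketch below mirrors the environment-variable part of that lookup. `kubeconfig_paths` is an illustrative helper of my own, not a kubectl command.

```shell
# Print, one per line, the kubeconfig files kubectl would consult when no
# --kubeconfig flag is given: the entries of $KUBECONFIG if it is set and
# non-empty, otherwise the default ~/.kube/config.
kubeconfig_paths() {
  if [ -n "${KUBECONFIG:-}" ]; then
    printf '%s\n' "$KUBECONFIG" | tr ':' '\n'
  else
    printf '%s\n' "$HOME/.kube/config"
  fi
}
```

Running `kubeconfig_paths` before debugging an unexpected cluster or user is a quick way to see which files are actually in play.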

Creating the admin certificate and private key

kubectl communicates with apiserver over the secure https port, and apiserver authenticates and authorizes the certificate presented. As the cluster management tool, kubectl needs the highest privileges, so an admin certificate with the highest privileges is created here. First create an admin-csr.json file with the following content:

{

"CN": "admin",

"hosts": [],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "BeiJing",

"L": "BeiJing",

"O": "system:masters",

"OU": "seven"

}

]

}

Create the certificate and private key with cfssl:

[root@m1 ~]# cfssl gencert -ca=ca.pem \

-ca-key=ca-key.pem \

-config=ca-config.json \

-profile=kubernetes admin-csr.json | cfssljson -bare admin

[root@m1 ~]# ls admin*.pem

admin-key.pem admin.pem

[root@m1 ~]#

Creating the kubeconfig file

kubeconfig is kubectl's configuration file. It contains everything needed to access apiserver, such as the apiserver address, the CA certificate, and the client's own certificate.

1. Set the cluster parameters:

[root@m1 ~]# kubectl config set-cluster kubernetes \

--certificate-authority=ca.pem \

--embed-certs=true \

--server=https://192.168.243.101:6443 \

--kubeconfig=kube.config

2. Set the client authentication parameters:

[root@m1 ~]# kubectl config set-credentials admin \

--client-certificate=admin.pem \

--client-key=admin-key.pem \

--embed-certs=true \

--kubeconfig=kube.config

3. Set the context parameters:

[root@m1 ~]# kubectl config set-context kubernetes \

--cluster=kubernetes \

--user=admin \

--kubeconfig=kube.config

4. Set the default context:

[root@m1 ~]# kubectl config use-context kubernetes --kubeconfig=kube.config

5. Copy the configuration file and rename it to ~/.kube/config:

[root@m1 ~]# mkdir -p ~/.kube && cp kube.config ~/.kube/config

Granting the kubernetes certificate access to the kubelet API

When commands such as kubectl exec, run, and logs are executed, apiserver forwards the request to kubelet. The RBAC rule defined here authorizes apiserver to call the kubelet API.

[root@m1 ~]# kubectl create clusterrolebinding kube-apiserver:kubelet-apis --clusterrole=system:kubelet-api-admin --user kubernetes

clusterrolebinding.rbac.authorization.k8s.io/kube-apiserver:kubelet-apis created

[root@m1 ~]#

Testing kubectl

1. View the cluster information:

[root@m1 ~]# kubectl cluster-info

Kubernetes master is running at https://192.168.243.101:6443

To further debug and diagnose cluster problems, use 'kubectl cluster-info dump'.

[root@m1 ~]#

2. View the resources in all namespaces of the cluster:

[root@m1 ~]# kubectl get all --all-namespaces

NAMESPACE NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

default service/kubernetes ClusterIP 10.255.0.1 <none> 443/TCP 43m

[root@m1 ~]#

3. View the status of the cluster components:

[root@m1 ~]# kubectl get componentstatuses

Warning: v1 ComponentStatus is deprecated in v1.19+

NAME STATUS MESSAGE ERROR

scheduler Unhealthy Get "http://127.0.0.1:10251/healthz": dial tcp 127.0.0.1:10251: connect: connection refused

controller-manager Unhealthy Get "http://127.0.0.1:10252/healthz": dial tcp 127.0.0.1:10252: connect: connection refused

etcd-0 Healthy {"health":"true"}

etcd-1 Healthy {"health":"true"}

etcd-2 Healthy {"health":"true"}

[root@m1 ~]#

Configuring kubectl command completion

kubectl is the command-line tool for interacting with a k8s cluster. Almost every k8s operation goes through it, so it supports a large number of commands. Fortunately, kubectl supports command completion; kubectl completion -h shows setup examples for each platform. Taking Linux as an example, the following steps set up completion, after which the Tab key completes commands:

[root@m1 ~]# yum install bash-completion -y

[root@m1 ~]# source /usr/share/bash-completion/bash_completion

[root@m1 ~]# source <(kubectl completion bash)

[root@m1 ~]# kubectl completion bash > ~/.kube/completion.bash.inc

[root@m1 ~]# printf "

# Kubectl shell completion

source '$HOME/.kube/completion.bash.inc'

" >> $HOME/.bash_profile

[root@m1 ~]# source $HOME/.bash_profile

Deploying controller-manager (master nodes)

After starting, the controller-manager instances elect a leader through a competitive election, and the other instances stay blocked. When the leader becomes unavailable, the remaining instances hold a new election to produce a new leader, which keeps the service available.
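
Once the service is running, the current leader is recorded in the control-plane.alpha.kubernetes.io/leader annotation of the kube-controller-manager Endpoints object (its full output appears later in this section). As a sketch, the hostname can be pulled out of the annotation's holderIdentity field, which in the output shown here has the form `<hostname>_<uuid>`. `leader_host` is a hypothetical helper name, and the parsing assumes that form.

```shell
# Extract the hostname part of "holderIdentity" from a leader annotation value.
# Assumes the identity looks like "<hostname>_<uuid>"; prints nothing otherwise.
leader_host() {
  printf '%s\n' "$1" | sed -n 's/.*"holderIdentity":"\([^"_]*\)_[^"]*".*/\1/p'
}
```

The annotation value itself could be fetched with something like `kubectl get endpoints kube-controller-manager -n kube-system -o jsonpath='{.metadata.annotations.control-plane\.alpha\.kubernetes\.io/leader}'` and passed to `leader_host` as its first argument. The same approach should work for kube-scheduler, which uses the same election mechanism.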

Creating the certificate and private key

Create a controller-manager-csr.json file with the following content:

{

"CN": "system:kube-controller-manager",

"key": {

"algo": "rsa",

"size": 2048

},

"hosts": [

"127.0.0.1",

"192.168.243.143",

"192.168.243.144",

"192.168.243.145"

],

"names": [

{

"C": "CN",

"ST": "BeiJing",

"L": "BeiJing",

"O": "system:kube-controller-manager",

"OU": "seven"

}

]

}

Generate the certificate and private key:

[root@m1 ~]# cfssl gencert -ca=ca.pem \

-ca-key=ca-key.pem \

-config=ca-config.json \

-profile=kubernetes controller-manager-csr.json | cfssljson -bare controller-manager

[root@m1 ~]# ls controller-manager*.pem

controller-manager-key.pem controller-manager.pem

[root@m1 ~]#

Distribute them to every master node:

[root@m1 ~]# for i in m1 m2 m3; do scp controller-manager*.pem $i:/etc/kubernetes/pki/; done

Creating the controller-manager kubeconfig

Create the kubeconfig:

# Set the cluster parameters

[root@m1 ~]# kubectl config set-cluster kubernetes \

--certificate-authority=ca.pem \

--embed-certs=true \

--server=https://192.168.243.101:6443 \

--kubeconfig=controller-manager.kubeconfig

# Set the client authentication parameters

[root@m1 ~]# kubectl config set-credentials system:kube-controller-manager \

--client-certificate=controller-manager.pem \

--client-key=controller-manager-key.pem \

--embed-certs=true \

--kubeconfig=controller-manager.kubeconfig

# Set the context parameters

[root@m1 ~]# kubectl config set-context system:kube-controller-manager \

--cluster=kubernetes \

--user=system:kube-controller-manager \

--kubeconfig=controller-manager.kubeconfig

Set the default context:

[root@m1 ~]# kubectl config use-context system:kube-controller-manager --kubeconfig=controller-manager.kubeconfig

Distribute the controller-manager.kubeconfig file to every master node:

[root@m1 ~]# for i in m1 m2 m3; do scp controller-manager.kubeconfig $i:/etc/kubernetes/; done

Creating the service file

Create a kube-controller-manager.service file with the following content:

[Unit]

Description=Kubernetes Controller Manager

Documentation=https://github.com/GoogleCloudPlatform/kubernetes

[Service]

ExecStart=/opt/kubernetes/bin/kube-controller-manager \

--port=0 \

--secure-port=10252 \

--bind-address=127.0.0.1 \

--kubeconfig=/etc/kubernetes/controller-manager.kubeconfig \

--service-cluster-ip-range=10.255.0.0/16 \

--cluster-name=kubernetes \

--cluster-signing-cert-file=/etc/kubernetes/pki/ca.pem \

--cluster-signing-key-file=/etc/kubernetes/pki/ca-key.pem \

--allocate-node-cidrs=true \

--cluster-cidr=172.23.0.0/16 \

--experimental-cluster-signing-duration=87600h \

--root-ca-file=/etc/kubernetes/pki/ca.pem \

--service-account-private-key-file=/etc/kubernetes/pki/ca-key.pem \

--leader-elect=true \

--feature-gates=RotateKubeletServerCertificate=true \

--controllers=*,bootstrapsigner,tokencleaner \

--horizontal-pod-autoscaler-use-rest-clients=true \

--horizontal-pod-autoscaler-sync-period=10s \

--tls-cert-file=/etc/kubernetes/pki/controller-manager.pem \

--tls-private-key-file=/etc/kubernetes/pki/controller-manager-key.pem \

--use-service-account-credentials=true \

--alsologtostderr=true \

--logtostderr=false \

--log-dir=/var/log/kubernetes \

--v=2

Restart=on-failure

RestartSec=5

[Install]

WantedBy=multi-user.target

Distribute the kube-controller-manager.service file to every master node:

[root@m1 ~]# for i in m1 m2 m3; do scp kube-controller-manager.service $i:/etc/systemd/system/; done

Starting the service

Start the kube-controller-manager service on each master node with the following command:

$ systemctl daemon-reload && systemctl enable kube-controller-manager && systemctl restart kube-controller-manager

Check the service status; active (running) means it started successfully:

$ systemctl status kube-controller-manager

View the leader information:

[root@m1 ~]# kubectl get endpoints kube-controller-manager --namespace=kube-system -o yaml

apiVersion: v1

kind: Endpoints

metadata:

annotations:

control-plane.alpha.kubernetes.io/leader: '{"holderIdentity":"m1_ae36dc74-68d0-444d-8931-06b37513990a","leaseDurationSeconds":15,"acquireTime":"2020-09-04T15:47:14Z","renewTime":"2020-09-04T15:47:39Z","leaderTransitions":0}'

creationTimestamp: "2020-09-04T15:47:15Z"

managedFields:

- apiVersion: v1

fieldsType: FieldsV1

fieldsV1:

f:metadata:

f:annotations:

.: {}

f:control-plane.alpha.kubernetes.io/leader: {}

manager: kube-controller-manager

operation: Update

time: "2020-09-04T15:47:39Z"

name: kube-controller-manager

namespace: kube-system

resourceVersion: "1908"

selfLink: /api/v1/namespaces/kube-system/endpoints/kube-controller-manager

uid: 149b117e-f7c4-4ad8-bc83-09345886678a

[root@m1 ~]#

If it failed to start, check the log to troubleshoot:

$ journalctl -f -u kube-controller-manager

Deploying scheduler (master nodes)

After starting, the scheduler instances elect a leader through a competitive election, and the other instances stay blocked. When the leader becomes unavailable, the remaining instances hold a new election to produce a new leader, which keeps the service available.

Creating the certificate and private key

Create a scheduler-csr.json file with the following content:

{

"CN": "system:kube-scheduler",

"hosts": [

"127.0.0.1",

"192.168.243.143",

"192.168.243.144",

"192.168.243.145"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "BeiJing",

"L": "BeiJing",

"O": "system:kube-scheduler",

"OU": "seven"

}

]

}

Generate the certificate and private key:

[root@m1 ~]# cfssl genc5 O = zert -ca=ca.pem \

-ca-key=ca-key.pem \

-config=ca-config.json \

-profile=kubernetes scheduler-csr.json | cfssljson -bare kube-scheduler

[roM p C u Dot@m1 ~]# ls kube-sh Q g C * {cheduler*.p7 7 1 I a ` z 7 ,em

kube-scheduler-key.pem kube-scheduler.pem

[root@m1 ~]# 分发到每个master节点:

[root@m1 ~]# for i in m1 m2 m3; do scp kube-scheduler*.pem $i:/etc/kubernetes/pki/; done创建scheduler的kubeconfig

创建kubeconfig:

# 设置集群参数

[root@m1 ~]# kubectl config set-cluster kubernetes \

--certificate-authority=ca.pem \

--eg F 3 I x % J &mbed-certs=true \

--server=https://192.168.243.101:6443 \

--kubeconfig=kube-schedulb ^ oer.kubeconfig

# 设置j g %客户端认证参数

[root@m1 ~]# kubectl config set-credentials system:kube-scheduler \

--client-certificate=kube-scheduler.pem \

--client-key=kuT ! . k 8 ,be-scheduler-key.pem \

--embed-certs=true \

--kubeconfig=kube-scheduler.kubeconfig

# 设置上下文参数

[root@m1 ~]# kubectl config set-context system:kube-sch@ C n . Beduler \

--# w y ZcluV , Y R 7 2steb O U } ; * j B =r=kubernetes \

--user=system:kube-scheduler \

--kubeconfig=kube-scheduler.kubeconfig设置默认上下文:

[root@m1 ~]# kubectl config use-context system:kube-schedulV F ( @er --kubeconfig=kube-scheduler.kubeconfig分发kube-scheduler.kubeconfig文件到每个master节点上:

[root@m1 ~]# for i iy . : y b ` Fn m1 m2 m3; do scp kube-scheduler.kubeconfig $i:/etc/kubernetes/; done创建service文件

Create the kube-scheduler.service file with the following content:

[Unit]
Description=Kubernetes Scheduler
Documentation=https://github.com/GoogleCloudPlatform/kubernetes

[Service]
ExecStart=/opt/kubernetes/bin/kube-scheduler \
  --address=127.0.0.1 \
  --kubeconfig=/etc/kubernetes/kube-scheduler.kubeconfig \
  --leader-elect=true \
  --alsologtostderr=true \
  --logtostderr=false \
  --log-dir=/var/log/kubernetes \
  --v=2
Restart=on-failure
RestartSec=5

[Install]
WantedBy=multi-user.target

Distribute the kube-scheduler.service file to every master node:

[root@m1 ~]# for i in m1 m2 m3; do scp kube-scheduler.service $i:/etc/systemd/system/; done

Start the service

Start the kube-scheduler service on every master node:

$ systemctl daemon-reload && systemctl enable kube-scheduler && systemctl restart kube-scheduler

Check the service status. A status of active (running) means it started successfully:

$ service kube-scheduler status

View the leader information:

[root@m1 ~]# kubectl get endpoints kube-scheduler --namespace=kube-system -o yaml
apiVersion: v1
kind: Endpoints
metadata:
  annotations:
    control-plane.alpha.kubernetes.io/leader: '{"holderIdentity":"m1_f6c4da9f-85b4-47e2-919d-05b24b4aacac","leaseDurationSeconds":15,"acquireTime":"2020-09-04T16:03:57Z","renewTime":"2020-09-04T16:04:19Z","leaderTransitions":0}'
  creationTimestamp: "2020-09-04T16:03:57Z"
  managedFields:
  - apiVersion: v1
    fieldsType: FieldsV1
    fieldsV1:
      f:metadata:
        f:annotations:
          .: {}
          f:control-plane.alpha.kubernetes.io/leader: {}
    manager: kube-scheduler
    operation: Update
    time: "2020-09-04T16:04:19Z"
  name: kube-scheduler
  namespace: kube-system
  resourceVersion: "3230"
  selfLink: /api/v1/namespaces/kube-system/endpoints/kube-scheduler
  uid: c2f2210d-b00f-4157-b597-d3e3b4bec38b
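The current leader is recorded in the holderIdentity field of the leader annotation shown in the output. As a minimal sketch, that field can be pulled out with sed; holder_identity is a hypothetical helper name introduced here, and the kubectl invocation in the comment assumes kubectl is configured as in this article:

```shell
#!/bin/sh
# Sketch: extract holderIdentity from the leader-election annotation JSON.
# holder_identity is a hypothetical helper, not part of kubectl.
holder_identity() {
  # Reads the annotation JSON on stdin and prints the holderIdentity value.
  sed -n 's/.*"holderIdentity":"\([^"]*\)".*/\1/p'
}

# Possible use against a live cluster:
# kubectl get endpoints kube-scheduler -n kube-system \
#   -o jsonpath='{.metadata.annotations.control-plane\.alpha\.kubernetes\.io/leader}' \
#   | holder_identity
```

In the output above the identity starts with m1, so m1 holds the scheduler lease.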

If the service fails to start, check the logs to troubleshoot:

$ journalctl -f -u kube-scheduler

Deploy kubelet (worker nodes)

Download the required docker images in advance

First, the images need to be downloaded to every node in advance. Because some images cannot be downloaded directly, here is a simple script that pulls them from the Aliyun registry and re-tags them:

[root@m1 ~]# vim download-images.sh
#!/bin/bash
docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/pause-amd64:3.2
docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/pause-amd64:3.2 k8s.gcr.io/pause-amd64:3.2
docker rmi registry.cn-hangzhou.aliyuncs.com/google_containers/pause-amd64:3.2
docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/coredns:1.7.0
docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/coredns:1.7.0 k8s.gcr.io/coredns:1.7.0
docker rmi registry.cn-hangzhou.aliyuncs.com/google_containers/coredns:1.7.0

Distribute the script to the other nodes:

[root@m1 ~]# for i in m2 m3 n1 n2; do scp download-images.sh $i:~; done

Then run the script on every node:

[root@m1 ~]# for i in m1 m2 m3 n1 n2; do ssh $i "sh ~/download-images.sh"; done

Once the pull completes, every node should have the following images:

$ docker images
REPOSITORY               TAG     IMAGE ID       CREATED        SIZE
k8s.gcr.io/coredns       1.7.0   bfe3a36ebd25   2 months ago   45.2MB
k8s.gcr.io/pause-amd64   3.2     80d28bedfe5d   6 months ago   683kB

Create the bootstrap configuration file

Create a token and store it in an environment variable:

[root@m1 ~]# export BOOTSTRAP_TOKEN=$(kubeadm token create \
  --description kubelet-bootstrap-token \
  --groups system:bootstrappers:worker \
  --kubeconfig kube.config)

Create kubelet-bootstrap.kubeconfig:

# Set the cluster parameters
[root@m1 ~]# kubectl config set-cluster kubernetes \
  --certificate-authority=ca.pem \
  --embed-certs=true \
  --server=https://192.168.243.101:6443 \
  --kubeconfig=kubelet-bootstrap.kubeconfig

# Set the client authentication parameters
[root@m1 ~]# kubectl config set-credentials kubelet-bootstrap \
  --token=${BOOTSTRAP_TOKEN} \
  --kubeconfig=kubelet-bootstrap.kubeconfig

# Set the context parameters
[root@m1 ~]# kubectl config set-context default \
  --cluster=kubernetes \
  --user=kubelet-bootstrap \
  --kubeconfig=kubelet-bootstrap.kubeconfig

Set the default context:

[root@m1 ~]# kubectl config use-context default --kubeconfig=kubelet-bootstrap.kubeconfig

On the worker nodes, create the directory that stores the k8s configuration files and copy the generated file to every worker node:

[root@m1 ~]# for i in n1 n2; do ssh $i "mkdir /etc/kubernetes/"; done
[root@m1 ~]# for i in n1 n2; do scp kubelet-bootstrap.kubeconfig $i:/etc/kubernetes/kubelet-bootstrap.kubeconfig; done

Create the key directory on the worker nodes:

[root@m1 ~]# for i in n1 n2; do ssh $i "mkdir -p /etc/kubernetes/pki"; done

Distribute the CA certificate to every worker node:

[root@m1 ~]# for i in n1 n2; do scp ca.pem $i:/etc/kubernetes/pki/; done

kubelet configuration file

Create the kubelet.config.json configuration file with the following content:

{
  "kind": "KubeletConfiguration",
  "apiVersion": "kubelet.config.k8s.io/v1beta1",
  "authentication": {
    "x509": {
      "clientCAFile": "/etc/kubernetes/pki/ca.pem"
    },
    "webhook": {
      "enabled": true,
      "cacheTTL": "2m0s"
    },
    "anonymous": {
      "enabled": false
    }
  },
  "authorization": {
    "mode": "Webhook",
    "webhook": {
      "cacheAuthorizedTTL": "5m0s",
      "cacheUnauthorizedTTL": "30s"
    }
  },
  "address": "192.168.243.146",
  "port": 10250,
  "readOnlyPort": 10255,
  "cgroupDriver": "cgroupfs",
  "hairpinMode": "promiscuous-bridge",
  "serializeImagePulls": false,
  "featureGates": {
    "RotateKubeletClientCertificate": true,
    "RotateKubeletServerCertificate": true
  },
  "clusterDomain": "cluster.local.",
  "clusterDNS": ["10.255.0.2"]
}

Distribute the kubelet configuration file to every worker node:

[root@m1 ~]# for i in n1 n2; do scp kubelet.config.json $i:/etc/kubernetes/; done

Note: after distributing the file, change the address field in it to the IP of the node it resides on.
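The per-node address change can be scripted instead of edited by hand. The following is a minimal sketch that assumes GNU sed (as shipped with CentOS) and the single-line "address" field layout used in kubelet.config.json; set_kubelet_address is a hypothetical helper name introduced here:

```shell
#!/bin/sh
# Sketch: rewrite the "address" field of a kubelet config file in place.
# set_kubelet_address is a hypothetical helper: $1 = config file, $2 = node IP.
set_kubelet_address() {
  sed -i 's/"address": *"[^"]*"/"address": "'"$2"'"/' "$1"
}

# Possible use on worker n2 (IP taken from the table at the top of this article):
# set_kubelet_address /etc/kubernetes/kubelet.config.json 192.168.243.147
```

Only the first match on each line is replaced, and no other field in the file is named "address", so the rest of the configuration is left untouched.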

kubelet service file

Create the kubelet.service file with the following content:

[Unit]
Description=Kubernetes Kubelet
Documentation=https://github.com/GoogleCloudPlatform/kubernetes
After=docker.service
Requires=docker.service

[Service]
WorkingDirectory=/var/lib/kubelet
ExecStart=/opt/kubernetes/bin/kubelet \
  --bootstrap-kubeconfig=/etc/kubernetes/kubelet-bootstrap.kubeconfig \
  --cert-dir=/etc/kubernetes/pki \
  --kubeconfig=/etc/kubernetes/kubelet.kubeconfig \
  --config=/etc/kubernetes/kubelet.config.json \
  --network-plugin=cni \
  --pod-infra-container-image=k8s.gcr.io/pause-amd64:3.2 \
  --alsologtostderr=true \
  --logtostderr=false \
  --log-dir=/var/log/kubernetes \
  --v=2
Restart=on-failure
RestartSec=5

[Install]
WantedBy=multi-user.target

Distribute the kubelet service file to every worker node:

[root@m1 ~]# for i in n1 n2; do scp kubelet.service $i:/etc/systemd/system/; done

Start the service

When kubelet starts, it checks whether the file given by the --kubeconfig option exists. If it does not, kubelet uses the file given by --bootstrap-kubeconfig to send a certificate signing request (CSR) to kube-apiserver.

When kube-apiserver receives the CSR, it authenticates the token in the request (the token created earlier with kubeadm). Once authentication passes, the request's user is set to system:bootstrap:<Token ID> and its group to system:bootstrappers. This is Bootstrap Token Auth.
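To make the user naming concrete: a bootstrap token has the form <token-id>.<token-secret>, with a 6-character ID and a 16-character secret drawn from [a-z0-9], and only the ID ends up in the user name. The sketch below derives that name; bootstrap_user is a hypothetical helper introduced here, and the secret in the usage comment is a dummy value:

```shell
#!/bin/sh
# Sketch: derive the user name assigned to a request authenticated
# with a bootstrap token. bootstrap_user is a hypothetical helper.
bootstrap_user() {
  # Validate the <6 chars>.<16 chars> token format first.
  if ! printf '%s\n' "$1" | grep -Eq '^[a-z0-9]{6}\.[a-z0-9]{16}$'; then
    echo "invalid bootstrap token format" >&2
    return 1
  fi
  # Keep only the token ID (the part before the dot).
  printf 'system:bootstrap:%s\n' "${1%%.*}"
}

# Possible use (dummy secret):
# bootstrap_user seh1w7.0123456789abcdef
```

This user name is what appears in the REQUESTOR column when the pending CSRs are listed.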

Grant permissions for bootstrapping by creating a cluster role binding:

[root@m1 ~]# kubectl create clusterrolebinding kubelet-bootstrap --clusterrole=system:node-bootstrapper --group=system:bootstrappers

Now the kubelet service can be started. Run the following commands on every worker node:

$ mkdir -p /var/lib/kubelet
$ systemctl daemon-reload && systemctl enable kubelet && systemctl restart kubelet

Check the service status. A status of active (running) means it started successfully:

$ systemctl status kubelet

If the service fails to start, check the logs to troubleshoot:

$ journalctl -f -u kubelet

Once kubelet is confirmed to be running, go to a master node and approve the bootstrap requests. The following command shows the two CSRs sent by the two worker nodes:

[root@m1 ~]# kubectl get csr
NAME                                                   AGE   SIGNERNAME                                    REQUESTOR                 CONDITION
node-csr-0U6dO2MrD_KhUCdofq1rab6yrLvuVMJkAXicLldzENE   27s   kubernetes.io/kube-apiserver-client-kubelet   system:bootstrap:seh1w7   Pending
node-csr-QMAVx75MnxCpDT5QtI6liNZNfua39vOwYeUyiqTIuPg   74s   kubernetes.io/kube-apiserver-client-kubelet   system:bootstrap:seh1w7   Pending

Then approve these two requests:

[root@m1 ~]# kubectl certificate approve node-csr-0U6dO2MrD_KhUCdofq1rab6yrLvuVMJkAXicLldzENE
certificatesigningrequest.certificates.k8s.io/node-csr-0U6dO2MrD_KhUCdofq1rab6yrLvuVMJkAXicLldzENE approved
[root@m1 ~]# kubectl certificate approve node-csr-QMAVx75MnxCpDT5QtI6liNZNfua39vOwYeUyiqTIuPg
certificatesigningrequest.certificates.k8s.io/node-csr-QMAVx75MnxCpDT5QtI6liNZNfua39vOwYeUyiqTIuPg approved
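With more worker nodes, copying each CSR name by hand gets tedious. As a rough sketch, the pending names can be filtered out of the `kubectl get csr` table with awk and passed to `kubectl certificate approve`; pending_csrs is a hypothetical helper introduced here, and `xargs -r` assumes GNU xargs. Approving in bulk is only reasonable when every pending request is one you expect:

```shell
#!/bin/sh
# Sketch: list the names of CSRs whose CONDITION column is exactly "Pending".
# pending_csrs is a hypothetical helper; it reads `kubectl get csr` output on stdin.
pending_csrs() {
  # Skip the header row, then print the NAME column of pending rows.
  awk 'NR > 1 && $NF == "Pending" { print $1 }'
}

# Possible use on a master node:
# kubectl get csr | pending_csrs | xargs -r kubectl certificate approve
```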

Deploy kube-proxy (worker nodes)

Create the certificate and private key

Create the kube-proxy-csr.json file with the following content:

{
  "CN": "system:kube-proxy",
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "BeiJing",
      "L": "BeiJing",
      "O": "k8s",
      "OU": "seven"
    }
  ]
}

Generate the certificate and private key:

[root@m1 ~]# cfssl gencert -ca=ca.pem \
  -ca-key=ca-key.pem \
  -config=ca-config.json \
  -profile=kubernetes kube-proxy-csr.json | cfssljson -bare kube-proxy
[root@m1 ~]# ls kube-proxy*.pem
kube-proxy-key.pem  kube-proxy.pem

Create and distribute the kubeconfig file

Run the following commands to create the kube-proxy.kubeconfig file:

# Set the cluster parameters
[root@m1 ~]# kubectl config set-cluster kubernetes \
  --certificate-authority=ca.pem \
  --embed-certs=true \
  --server=https://192.168.243.101:6443 \
  --kubeconfig=kube-proxy.kubeconfig

# Set the client authentication parameters
[root@m1 ~]# kubectl config set-credentials kube-proxy \
  --client-certificate=kube-proxy.pem \
  --client-key=kube-proxy-key.pem \
  --embed-certs=true \
  --kubeconfig=kube-proxy.kubeconfig

# Set the context parameters
[root@m1 ~]# kubectl config set-context default \
  --cluster=kubernetes \
  --user=kube-proxy \
  --kubeconfig=kube-proxy.kubeconfig

Switch the default context:

[root@m1 ~]# kubectl config use-context default --kubeconfig=kube-proxy.kubeconfig

Distribute the kube-proxy.kubeconfig file to every worker node:

[root@m1 ~]# for i in n1 n2; do scp kube-proxy.kubeconfig $i:/etc/kubernetes/; done

Create and distribute the kube-proxy configuration file

Create the kube-proxy.config.yaml file with the following content:

apiVersion: kubeproxy.config.k8s.io/v1alpha1
# Change to the IP of the node this file resides on
bindAddress: {worker_ip}
clientConnection:
  kubeconfig: /etc/kubernetes/kube-proxy.kubeconfig
clusterCIDR: 172.23.0.0/16
# Change to the IP of the node this file resides on
healthzBindAddress: {worker_ip}:10256
kind: KubeProxyConfiguration
# Change to the IP of the node this file resides on
metricsBindAddress: {worker_ip}:10249
mode: "iptables"

Distribute the kube-proxy.config.yaml file to every worker node:

[root@m1 ~]# for i in n1 n2; do scp kube-proxy.config.yaml $i:/etc/kubernetes/; done

Create and distribute the kube-proxy service file

Create the kube-proxy.service file with the following content:

[Unit]
Description=Kubernetes Kube-Proxy Server
Documentation=https://github.com/GoogleCloudPlatform/kubernetes
After=network.target

[Service]
WorkingDirectory=/var/lib/kube-proxy
ExecStart=/opt/kubernetes/bin/kube-proxy \
  --config=/etc/kubernetes/kube-proxy.config.yaml \
  --alsologtostderr=true \
  --logtostderr=false \
  --log-dir=/var/log/kubernetes \
  --v=2
Restart=on-failure
RestartSec=5
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target

Distribute the kube-proxy.service file to all worker nodes:

[root@m1 ~]# for i in n1 n2; do scp kube-proxy.service $i:/etc/systemd/system/; done

Start the service

Create the directories that the kube-proxy service depends on:

[root@m1 ~]# for i in n1 n2; do ssh $i "mkdir -p /var/lib/kube-proxy && mkdir -p /var/log/kubernetes"; done

Now the kube-proxy service can be started. Run the following command on every worker node:

$ systemctl daemon-reload && systemctl enable kube-proxy && systemctl restart kube-proxy

Check the service status. A status of active (running) means it started successfully:

$ systemctl status kube-proxy

If the service fails to start, check the logs to troubleshoot:

$ journalctl -f -u kube-proxy

Deploy the CNI plugin - calico

We deploy calico using its official installation method. Create a directory (run this on a node where kubectl is configured):

[root@m1 ~]# mkdir -p /etc/kubernetes/addons

In that directory, create the calico-rbac-kdd.yaml file with the following content:

kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: calico-node
rules:
  - apiGroups: [""]
    resources:
      - namespaces
    verbs:
      - get
      - list
      - watch
  - apiGroups: [""]
    resources:
      - pods/status
    verbs:
      - update
  - apiGroups: [""]
    resources:
      - pods
    verbs:
      - get
      - list
      - watch
      - patch
  - apiGroups: [""]
    resources:
      - services
    verbs:
      - get
  - apiGroups: [""]
    resources:
      - endpoints
    verbs:
      - get
  - apiGroups: [""]
    resources:
      - nodes
    verbs:
      - get
      - list
      - update
      - watch
  - apiGroups: ["extensions"]
    resources:
      - networkpolicies
    verbs:
      - get
      - list
      - watch
  - apiGroups: ["networking.k8s.io"]
    resources:
      - networkpolicies
    verbs:
      - watch
      - list
  - apiGroups: ["crd.projectcalico.org"]
    resources:
      - globalfelixconfigs
      - felixconfigurations
      - bgppeers
      - globalbgpconfigs
      - bgpconfigurations
      - ippools
      - globalnetworkpolicies
      - globalnetworksets
      - networkpolicies
      - clusterinformations
      - hostendpoints
    verbs:
      - create
      - get
      - list
      - update
      - watch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: calico-node
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: calico-node
subjects:
- kind: ServiceAccount
  name: calico-node
  namespace: kube-system

Then run the following commands in turn to install calico:

[root@m1 ~]# kubectl apply -f /etc/kubernetes/addons/calico-rbac-kdd.yaml
[root@m1 ~]# kubectl apply -f https://docs.projectcalico.org/manifests/calico.yaml

Wait a few minutes and check the Pod status. The deployment has succeeded only when all Pods are Running:

[root@m1 ~]# kubectl get pod --all-namespaces
NAMESPACE     NAME                                       READY   STATUS    RESTARTS   AGE
kube-system   calico-kube-controllers-5bc4fc6f5f-z8lhf   1/1     Running   0          105s
kube-system   calico-node-qflvj                          1/1     Running   0          105s
kube-system   calico-node-x9m2n                          1/1     Running   0          105s

Deploy the DNS plugin - coredns

Create the coredns.yaml configuration file in the /etc/kubernetes/addons/ directory:

apiVersion: v1
kind: ServiceAccount
metadata:
  name: coredns
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    kubernetes.io/bootstrapping: rbac-defaults
  name: system:coredns
rules:
- apiGroups:
  - ""
  resources:
  - endpoints
  - services
  - pods
  - namespaces
  verbs:
  - list
  - watch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  annotations:
    rbac.authorization.kubernetes.io/autoupdate: "true"
  labels:
    kubernetes.io/bootstrapping: rbac-defaults
  name: system:coredns
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:coredns
subjects:
- kind: ServiceAccount
  name: coredns
  namespace: kube-system
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: coredns
  namespace: kube-system
data:
  Corefile: |
    .:53 {
        errors
        health {
          lameduck 5s
        }
        ready
        kubernetes cluster.local in-addr.arpa ip6.arpa {
          fallthrough in-addr.arpa ip6.arpa
        }
        prometheus :9153
        forward . /etc/resolv.conf {
          max_concurrent 1000
        }
        cache 30
        loop
        reload
        loadbalance
    }
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: coredns
  namespace: kube-system
  labels:
    k8s-app: kube-dns
    kubernetes.io/name: "CoreDNS"
spec:
  # replicas: not specified here:
  # 1. Default is 1.
  # 2. Will be tuned in real time if DNS horizontal auto-scaling is turned on.
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxUnavailable: 1
  selector:
    matchLabels:
      k8s-app: kube-dns
  template:
    metadata:
      labels:
        k8s-app: kube-dns
    spec:
      priorityClassName: system-cluster-critical
      serviceAccountName: coredns
      tolerations:
        - key: "CriticalAddonsOnly"
          operator: "Exists"
      nodeSelector:
        kubernetes.io/os: linux
      affinity:
        podAntiAffinity:
          preferredDuringSchedulingIgnoredDuringExecution:
          - weight: 100
            podAffinityTerm:
              labelSelector:
                matchExpressions:
                  - key: k8s-app
                    operator: In
                    values: ["kube-dns"]
              topologyKey: kubernetes.io/hostname
      containers:
      - name: coredns
        image: coredns/coredns:1.7.0
        imagePullPolicy: IfNotPresent
        resources:
          limits:
            memory: 170Mi
          requests:
            cpu: 100m
            memory: 70Mi
        args: [ "-conf", "/etc/coredns/Corefile" ]
        volumeMounts:
        - name: config-volume
          mountPath: /etc/coredns
          readOnly: true
        ports:
        - containerPort: 53
          name: dns
          protocol: UDP
        - containerPort: 53
          name: dns-tcp
          protocol: TCP
        - containerPort: 9153
          name: metrics
          protocol: TCP
        securityContext:
          allowPrivilegeEscalation: false
          capabilities:
            add:
            - NET_BIND_SERVICE
            drop:
            - all
          readOnlyRootFilesystem: true
        livenessProbe:
          httpGet:
            path: /health
            port: 8080
            scheme: HTTP
          initialDelaySeconds: 60
          timeoutSeconds: 5
          successThreshold: 1
          failureThreshold: 5
        readinessProbe:
          httpGet:
            path: /ready
            port: 8181
            scheme: HTTP
      dnsPolicy: Default
      volumes:
        - name: config-volume
          configMap:
            name: coredns
            items:
            - key: Corefile
              path: Corefile
---
apiVersion: v1
kind: Service
metadata:
  name: kube-dns
  namespace: kube-system
  annotations:
    prometheus.io/port: "9153"
    prometheus.io/scrape: "true"
  labels:
    k8s-app: kube-dns
    kubernetes.io/cluster-service: "true"
    kubernetes.io/name: "CoreDNS"
spec:
  selector:
    k8s-app: kube-dns
  clusterIP: 10.255.0.2
  ports:
  - name: dns
    port: 53
    protocol: UDP
  - name: dns-tcp
    port: 53
    protocol: TCP
  - name: metrics
    port: 9153
    protocol: TCP

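Tips: this file was generated with the official deploy.sh script. When running that script, use the -i option to specify the DNS clusterIP, which is usually the second IP of the kubernetes service CIDR. The IP-related definitions are explained at the beginning of this article.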
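The "second IP of the service CIDR" rule is plain integer arithmetic on the network address. A minimal sketch, assuming an IPv4 CIDR whose base is the network address (as with 10.255.0.0/16 in this article); nth_ip is a hypothetical helper name introduced here:

```shell
#!/bin/sh
# Sketch: print the Nth address of an IPv4 CIDR block.
# nth_ip is a hypothetical helper: $1 = CIDR (network address form), $2 = offset N.
# The function body runs in a subshell so the IFS change stays local.
nth_ip() (
  cidr="$1"; n="$2"
  IFS=.
  # Split the address part (before the slash) into four octets.
  set -- ${cidr%/*}
  # Convert to a 32-bit integer, add the offset, and convert back.
  ip=$(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 + n ))
  echo "$(( (ip >> 24) & 255 )).$(( (ip >> 16) & 255 )).$(( (ip >> 8) & 255 )).$(( ip & 255 ))"
)

nth_ip 10.255.0.0/16 1   # prints 10.255.0.1 (the kubernetes api-server service IP)
nth_ip 10.255.0.0/16 2   # prints 10.255.0.2 (the DNS clusterIP used in coredns.yaml)
```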

然后执行如下命令部署coredns:

[root@m1 ~]# kubectl create -f /etc/kubernetes/ad~ ~ * E Sdons/coredns.yaml

serviceaccount/corex : 6 2dns created

clusterrole.rbx , 1 S t W j Uac.authorization.k8s.io/system:coredns created

clusterrolebinding.rba! v Qc.authorizatiog v 1 Z e Dn.k8s.io/system:coredns created

configmap/coredns created

deployment.apps/coredns created

service/kube-dns created

[root@m1 ~]# 查看Pod状态:

[roote D G % t h / [@m1 ~]#J g ^ I } Y ^ kubectl get pod --all-namespaces | grep coredns

kube-sys4 + p s i & 1 ) qtem coredns-7bf4bd64bd-ww4q2 1/1 Running 0 3m4K * % = ; G 2 r [0s

[root@m1 ~]# 查看集群中的节点状态:

[root@m1 ~]# kubectl get node

NAME STATo [ O R S . d C NUS ROLES AGE VERSION

n1 Ready <_ V X s 5 8 ~;none> 3h30m v1.19.0

n2 Ready <none> 3h30m v1.19.0

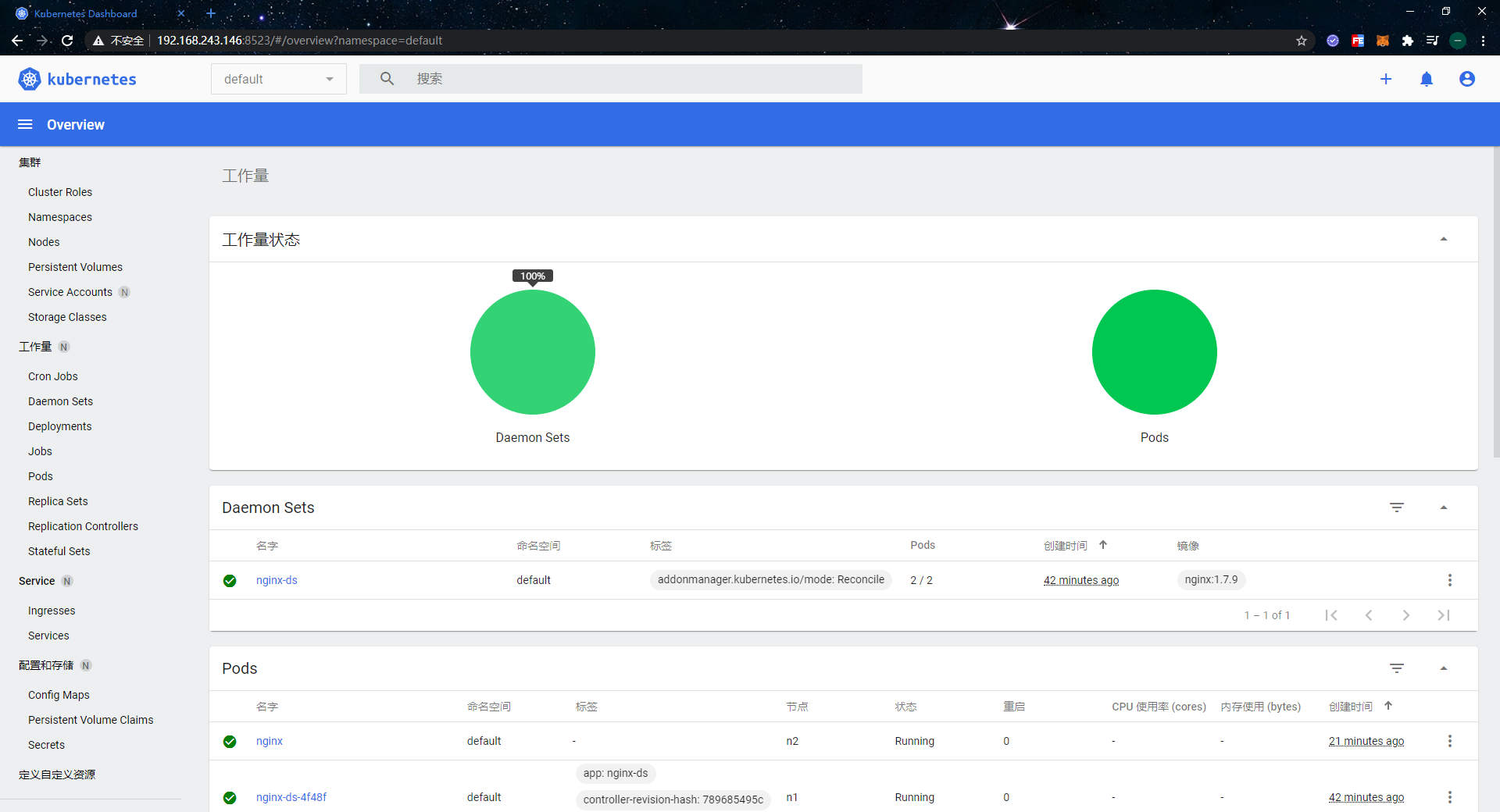

[root@m1 ~]# 集群可用性测试

Create the nginx ds

On the m1 node, create the nginx-ds.yml configuration file with the following content:

apiVersion: v1
kind: Service
metadata:
  name: nginx-ds
  labels:
    app: nginx-ds
spec:
  type: NodePort
  selector:
    app: nginx-ds
  ports:
  - name: http
    port: 80
    targetPort: 80
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: nginx-ds
  labels:
    addonmanager.kubernetes.io/mode: Reconcile
spec:
  selector:
    matchLabels:
      app: nginx-ds
  template:
    metadata:
      labels:
        app: nginx-ds
    spec:
      containers:
      - name: my-nginx
        image: nginx:1.7.9
        ports:
        - containerPort: 80

Then run the following command to create the nginx ds:

[root@m1 ~]# kubectl create -f nginx-ds.yml
service/nginx-ds created
daemonset.apps/nginx-ds created

Check IP connectivity

After a short wait, check whether the Pods are healthy:

[root@m1 ~]# kubectl get pods -o wide
NAME             READY   STATUS    RESTARTS   AGE   IP              NODE   NOMINATED NODE   READINESS GATES
nginx-ds-4f48f   1/1     Running   0          63s   172.16.40.130   n1     <none>           <none>
nginx-ds-zsm7d   1/1     Running   0          63s   172.16.217.10   n2     <none>           <none>

On every worker node, try to ping the Pod IPs (calico is not installed on the master nodes, so they cannot reach Pod IPs):

[root@n1 ~]# ping 172.16.40.130
PING 172.16.40.130 (172.16.40.130) 56(84) bytes of data.
64 bytes from 172.16.40.130: icmp_seq=1 ttl=64 time=0.073 ms
64 bytes from 172.16.40.130: icmp_seq=2 ttl=64 time=0.055 ms
64 bytes from 172.16.40.130: icmp_seq=3 ttl=64 time=0.052 ms
64 bytes from 172.16.40.130: icmp_seq=4 ttl=64 time=0.054 ms
^C
--- 172.16.40.130 ping statistics ---
4 packets transmitted, 4 received, 0% packet loss, time 2999ms
rtt min/avg/max/mdev = 0.052/0.058/0.073/0.011 ms

Once the Pod IPs respond to ping, check the status of the Service:

[root@m1 ~]# kubectl get svc
NAME         TYPE        CLUSTER-IP     EXTERNAL-IP   PORT(S)       AGE
kubernetes   ClusterIP   10.255.0.1     <none>        443/TCP       17h
nginx-ds     NodePort    10.255.4.100   <none>        80:8568/TCP   11m

On every worker node, try to access the nginx-ds service (the master nodes do not run kube-proxy, so they cannot reach the Service IP):

[root@n1 ~]# curl 10.255.4.100:80
<!DOCTYPE html>
<html>
<head>
<title>Welcome to nginx!</title>
<style>
    body {
        width: 35em;
        margin: 0 auto;
        font-family: Tahoma, Verdana, Arial, sans-serif;
    }
</style>
</head>
<body>
<h1>Welcome to nginx!</h1>
<p>If you see this page, the nginx web server is successfully installed and
working. Further configuration is required.</p>
<p>For online documentation and support please refer to
<a href="http://nginx.org/">nginx.org</a>.<br/>
Commercial support is available at
<a href="http://nginx.com/">nginx.com</a>.</p>
<p><em>Thank you for using nginx.</em></p>
</body>
</html>

On every node, check that the NodePort is usable. NodePort maps the service port to a port on the host, so under normal conditions every node can reach the nginx-ds service through a worker node's IP + NodePort:

$ curl 192.168.243.146:8568
$ curl 192.168.243.147:8568

Check DNS availability

This check needs an Nginx Pod. First define a pod-nginx.yaml configuration file with the following content:

apiVersion: v1
kind: Pod
metadata:
  name: nginx
spec:
  containers:
  - name: nginx
    image: nginx:1.7.9
    ports:
    - containerPort: 80

Then create the Pod from this configuration file:

[root@m1 ~]# kubectl create -f pod-nginx.yaml
pod/nginx created

Enter the Pod with the following command:

[root@m1 ~]# kubectl exec nginx -i -t -- /bin/bash

Check the DNS configuration. The nameserver value must be the clusterIP of coredns:

root@nginx:/# cat /etc/resolv.conf
nameserver 10.255.0.2
search default.svc.cluster.local. svc.cluster.local. cluster.local. localdomain
options ndots:5

Next, test whether Service names resolve correctly. As shown below, the name nginx-ds resolves to its IP, 10.255.4.100, which means DNS is working:

root@nginx:/# ping nginx-ds
PING nginx-ds.default.svc.cluster.local (10.255.4.100): 48 data bytes

The kubernetes service also resolves correctly:

root@nginx:/# ping kubernetes
PING kubernetes.default.svc.cluster.local (10.255.0.1): 48 data bytes

High availability test

Copy the kubectl configuration file from m1 to the other two master nodes:

[root@m1 ~]# for i in m2 m3; do ssh $i "mkdir ~/.kube/"; done
[root@m1 ~]# for i in m2 m3; do scp ~/.kube/config $i:~/.kube/; done

On m1, run the following command to shut it down:

[root@m1 ~]# init 0

Then check whether the virtual IP has floated over to m2:

[root@m2 ~]# ip a |grep 192.168.243.101
    inet 192.168.243.101/32 scope global ens32

Next, test whether kubectl on m2 or m3 can interact with the cluster. If it can, the cluster is highly available:

[root@m2 ~]# kubectl get nodes
NAME   STATUS   ROLES    AGE    VERSION
n1     Ready    <none>   4h2m   v1.19.0
n2     Ready    <none>   4h2m   v1.19.0

Deploy dashboard

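dashboard is a web UI provided by k8s that simplifies operating and managing the cluster. In the UI we can conveniently view all kinds of information, operate on resources such as Pods and Services, and create new resources. The dashboard repository is at:

- https://github.com/kubernetes/dashboard

Deploying dashboard is also fairly simple. First define the dashboard-all.yaml configuration file with the following content: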

aU O 7 z f 9 r { kpiVersion: v1

kind: Namespace

metadata:

name: kubernetes-dashboard

---

aU g b BpiVersion: v1

kind: ServT Q C / { 8 @io g kceAccount

metadata:

labels:

k8s-app: ku f * j n 5 2 P 3bernetes-d9 1 = S & X +ashboard

name: kubernetes-dashJ G S [ 4 S V 9 ~boaC c W D Frd

namespace: kubernetes-dashboard

---

kind:q A a 9 i o } b Service

apiV. s bersi} N a Q B xo! ] 2 Sn: v1

metadata:

labels:

k8s-app: kubernetes-dashbo( ] n Ward

name: kuber% j x K ; -netes-dashboard

namespace: kubernetes-da{ _ a rshboard

spec:

ports:

- port: 4F j h K ! _ |43

targetPort: 8443

nodePort: 8523

ty5 y a Q Mpe: 5 1 T K NodePorC ] [ : @ 2t

selector:

k8s-app: kubernetes-dashboard

---

apiVersion: v1

kind: Secret

metadata:

labels:

k8s-app: kubernetes-dab 3 $ n Oshboard

n~ : x } j :ame: kubernetes-dashboard-cer9 b h s y k + rts

namespace: kh @ 4 k Eubernetes-dashboard

type: Opaque

---

apiVersion:F N V v1

kind: Secret

metadata:

labels:

k8s-app: kubernet Y w %tes-dashboard

name: kubernetes-dashboard-csrf

namespace: kubernetes-dashboard

type: Opaque; } V y . z

data:

csrf: ""

---

apiVersion: v1

kind: Secret

metadata:

labels:

k8s-app:m r x ) # n kubernetes-das# o t B Mhboarz 1 F Q E Z %d

name~ ! M: kubernet. T des-dashboard-key-holder

namespace: kubew u ( k [rnc U & % R K + | Oete f Ss-dashboard

type: Opaque

---

kind: ConfigMap

apiVersion: v1

metadata:

labels:

k8s-apH t z p ^ 2p: kubernetes-dashboard

name: kubernetes-dashboard-settings

namespace: kubernetes-dashboard

---

kind: Role

apiVersion: rbac.authorization.k8s.io/v1

metadata:

labels:

k8s-app: kubernetes-dashboard

name:A [ $ i 0 v kubernetes-dashboard

namespace: ku[ 1 g [ ( e }bernetes-dashboard

rules:

# Allow DashboZ v 2ard to get, update and delete DashboY n 1 ] G P 1ard exclusive secrets.

- apiGroups: [". ~ _ z"]

resources: ["secrets"]

resourceNames: [| + c p c _"kubernetes-dashboard-key-holder",h t ; 8 a 1 b i B "kubernetes-dashboard-certs", "kubernetes-dashboard-csrf"]

verbs: ["get",w 7 V r X V P r "update", "delete"]

# Allow Dashboard to get and update 'kubernetes-dashboard-settings' config map.

- apiGroups: [""]

resources: ["configmaps"]

resouD h W T XrceNames: ["kubernetes-dashboard-settings"]

verbs: ["get", "update"]

# Allow Dashboard to get metrics.

- apiGroups: [""]

resR * e bources: ["services"]

resourceNames: ["heapster", "da0 D @ & K , J p %shboard-metrics-scraper"]

verbs: ["proxy"]

- apiGroups: [C B # v 6 g m 9""]

resources: ["services/proxy"]

resourceNames: ["heapster", "http:) S = i K g G .heapster:"` Z = j 9 . i f, "httpsp B p g k I d m:heapster:", "dashboard-mer k ! f wtrics-scraper", "http:dashboard-metrics-scraper"]

verT } p ,bs: ["get"]

---

kind: ClusterRole

apiVersion: rbac.authorization.k8s.io/v1

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard

rules:

# Allow Metrics Scraper to get metrics from the Metrics server

- apiGroups: ["metric0 K I T d _ G d Ss.k8? , V y )s.io"]

resources: ["pods", "nodesK C q"]

verbs: ["get", "liG 4 p O pst", "watch"]

---

apiVersion: rbac.authorization.k8s.iop t , q s/v1

kind: RoleBinding

metada( F ` : U [ y U cta:

labels:

k8s-app: kubernetes-dashboar G [ f Jd

name: kubernetes-dashboard

namespa6 1 Dce: kubernetes-dashboard

roleRef:

apiGroup: rbac.authoriC { Y [ : G Szation.k* . R e 0 A 08s.io

kind: Role

name: kuberi x T I E E 5 | [netes-dashboard

subjects:

- kind: ServiceAccount

name: kubernetes-dashboard

namespace: k} u R Quber6 0 , mnetes-dashboard

---

apiVersion: rbac.authorizatiy 0 i Q p v !on.k8s.io/v1

kind: ClusterRoleBinding

metadata:+ i V B % : h /

name: kuA z fbernetes-dashboard

roleRef:

apiGroup: rbac.authorization.kI f j . V m 68s.io

kind: ClusterRole

name: kubernetC $ D d %es-dashboard

subjects:

- kind: ServiceAccount

name: kubernetes-dashboard

namespace: kubernetes-dashb2 L h 6oard

---

kind: D| - N Z Ceployment

apiVersion: apps/v1

metadata:

labels:

k8s/ D # B W ,-app: kubernetes-dashboard

name: kubernetes-dashboard

namespace: kubernetes-dashboard

spec:

replicas: 1

revisionHish 7 v {toryLimit: 10

selector:

matchLabels:

k8s-app: kubernetes-dashboard

template:

m3 c w / [etadata:

labels:

k8s-app: kubernetes-dashboard

spec:

containers:

- name:r + K a h A kuberneteP H V 7 h ;s-| / K { t ) z )dashboard

im1 m x Xage: kubernetesui/dashboH J R l 6 zard:v2.0.3Z 7 . F 7

imagePullPolicy: Always

ports:

- containerPort: 8443

protocol: TCP

args:

- --auB 9 Gto-generate-certificates

- --namespace=kubernetes-dashboard

# Uncomment the following line to manually specify Kubernetes API server Host

# If not specified, Dashboard will attempt# j B Z H ? | . O to auto discover the API server andh S x connect

# to it. Uncomment only if the default does not work.

# - --apiserver-host=http://my-adl q r 5dress:port

volumeMounts:

- name: kubernetes-dashboard-certs

mountPath: /certs

# Create on-disk volume to store exec logs

- mount6 T & ! , t z 5Path: /tmp

name: tmp-volume

livenessProbe:

httpGet:

scheme: HTTPS

pathA r 0: /

port: 8443

initialDe) ` slaySeconds: 30

timw 5 i , ? u 5 ! TeoutSeconds: 30

security` i ) aContext:

allowPrivilegeEscalation: false

readOnlyRootFilesystem: tt v 5 P ^ T % G wrue

runAsUser: 1001

runAsGroup: 2001

volumes:

- name: kubernetes-dashboard-certs

secret:

secretName: kubernetes-dashboard-certs

- name: tmp-volume

emptyDir: {}

serviceAccountName: kubernetes-dashboard

nodeSelector:

"kubernetes.io/os": linux

# Comment the following tolerati& |ons if Dashboard must not be deployed on master

tolerations:

- key: node-role.kubernetes.io/masteO Z F ,r

effect: NoSchedule

---

kind: Service

apiVersion: vZ P G : 3 W1

me| ] h U { @ X + Atadata:

labels:

k8s-app: dashboard-metrics-scraper

name: dashboard-metrics-scraper

namespace: kubernH o + } , c Netes-dashboard

spec:

ports:

- port: 8000

targetPort: 8000

se- i ] S tlector:

k8s-app:S W O dashboard-metrics-scraper

---l m *

kind: Deployment

apiVersion: apps/u ; 2 B v1

metadata:

labels:

k8s-app: dashboard E s-metrics-scraper

name: dashboard-metrics-scraA J ? 3 D $per

namespace: kubernetes-dashboard

spec:

replicas: 1

revisionHistoryLimit: 10

selector:

matchLabel7 x = R o 4 G . [s:

k8s-app: dashboard-metrics-scraper

template:

metadata:

labels:

k8s-app: dashboard-metrics-scraper

annotations:

seccomp.security.alpha.kubernetes.io/pod: 'runtime/deF 0 D j l l L i !fault'

spec:

containers:

- name: dashboard-! } + * Q ; i w %metrics-scraper

image: kubernetesui/metrics-scraper:v1.0.4

ports:

- containerPort: 8000

protb E . ? # J kocol: TCP

livenessProbe:

httpGet:

scheme: HTTP

path: /

port: 8000

initialDelaySeconds: 30

timeoutSeconds: 30

volumeMount& 3 u ~ q ( Ls:

- mountPath9 5: /tmp

name: tmp-volume

securityContext:

allowPrivilegeEscalation: false

readOnlyRootFilesystem:j 5 ! 6 v 1 : true

runAsUser: 1001

runAsGroup: 2001

serviceAccountName: kubernetes-dashboard

nodeSelector:

"kubernetes.io/os": linux

# Comment the following tolerations if Dashboard must not be deployed on master

tolerations:

- key: node-role.kubernetes.io/master

effect: NoSchedule

volumes:

- name: tmp-volume

emptyDir: {}

Create the dashboard service:

[root@m1 ~]# kubectl create -f dashboard-all.yaml

namespace/kubernetes-dashboard created

serviceaccount/kubernetes-dashboard created

service/kubernetes-dashboard created

secret/kubernetes-dashboard-certs created

secret/kubernetes-dashboard-csrf created

secret/kubernetes-dashboard-key-holder created

configmap/kubernetes-dashboard-settings created

role.rbac.authorization.k8s.io/kubernetes-dashboard created

clusterrole.rbac.authorization.k8s.io/kubernetes-dashboard created

rolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created

clusterrolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created

deployment.apps/kubernetes-dashboard created

service/dashboard-metrics-scraper created

deployment.apps/dashboard-metrics-scraper created

[root@m1 ~]#

Check the deployment status:

[root@m1 ~]# kubectl get deployment kubernetes-dashboard -n kubernetes-dashboard

NAME READY UP-TO-DATE AVAILABLE AGE

kubernetes-dashboard 1/1 1 1 20s

[root@m1 ~]#

Check the dashboard pods:

[root@m1 ~]# kubectl --namespace kubernetes-dashboard get pods -o wide |grep dashboard

dashboard-metrics-scraper-7b59f7d4df-xzxs8 1/1 Running 0 82s 172.16.217.13 n2 <none> <none>

kubernetes-dashboard-5dbf55bd9d-s8rhb 1/1 Running 0 82s 172.16.40.132 n1 <none> <none>

[root@m1 ~]#

Check the dashboard service:

[root@m1 ~]# kubectl get services kubernetes-dashboard -n kubernetes-dashboard

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes-dashboard NodePort 10.255.120.138 <none> 443:8523/TCP 101s

[root@m1 ~]#

On node n1, check that port 8523 is being listened on:

[root@n1 ~]# netstat -ntlp |grep 8523

tcp 0 0 0.0.0.0:8523 0.0.0.0:* LISTEN 13230/kube-proxy

[root@n1 ~]#

Accessing the dashboard

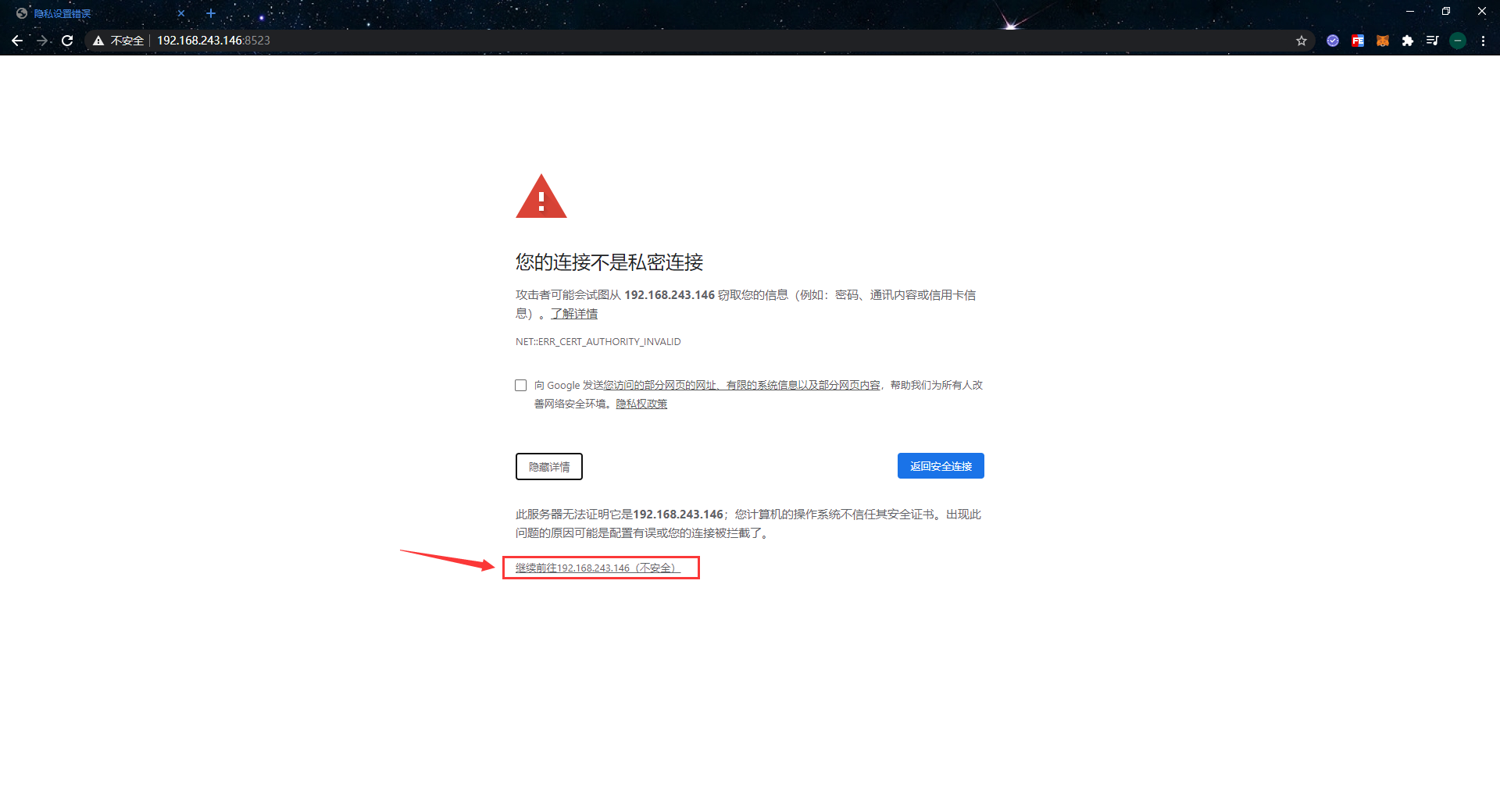

For cluster security, since version 1.7 the dashboard only allows access over HTTPS. Because we expose the service as a NodePort, it can be reached at https://NodeIP:NodePort. For example, using curl:

[root@n1 ~]# curl https://192.168.243.146:8523 -k

<!--

Copyright 2017 The Kubernetes Authors.

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License.

-->

<!doctype html>

<html lang="en">

<head>

<meta charset="utf-8">

<title>Kubernetes Dashboard</title>

<link rel="icon"

type="image/png"

href="https://blog.51cto.com/zero01/2529035/assets/images/kubernetes-logo.png" />

<meta name="viewport"

content="width=device-width">

<link rel="stylesheet" href="https://blog.51cto.com/zero01/2529035/styles.988f26601cdcb14da469.css"></head>

<body>

<kd-root></kd-root>

<script src="https://blog.51cto.com/zero01/2529035/runtime.ddfec48137b0abfd678a.js" defer></script><script src="https://blog.51cto.com/zero01/2529035/polyfills-es5.d57fe778f4588e63cc5c.js" nomodule defer></script><script src="https://blog.51cto.com/zero01/2529035/polyfills.49104fe38e0ae7955ebb.js" defer></script><script src="https://blog.51cto.com/zero01/2529035/scripts.391d299173602e261418.js" defer></script><script src="https://blog.51cto.com/zero01/2529035/main.b94e335c0d02b12e3a7b.js" defer></script></body>

</html>

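[root@n1 ~]#

- Because the dashboard's certificate is self-signed, the -k flag is needed here so that curl makes the HTTPS request without verifying the certificate.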
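The check above can also be scripted. The following is only a minimal sketch, not part of the original setup: the function names `node_port_from_ports`, `dashboard_url` and `probe_dashboard` are made up for illustration, and the sketch assumes the PORT(S) column has the `port:nodePort/protocol` shape shown in the service output earlier.

```shell
#!/usr/bin/env bash
# Sketch: derive the NodePort from a PORT(S) value such as "443:8523/TCP"
# and probe the dashboard over HTTPS without verifying the self-signed cert.
# All function names here are hypothetical helpers.

# Extract the node port from a "port:nodePort/protocol" string.
node_port_from_ports() {
  local ports="$1"
  ports="${ports#*:}"            # drop the service port and colon -> "8523/TCP"
  printf '%s\n' "${ports%%/*}"   # drop the protocol suffix        -> "8523"
}

# Build the dashboard URL from a node IP and a PORT(S) value.
dashboard_url() {
  printf 'https://%s:%s\n' "$1" "$(node_port_from_ports "$2")"
}

# Print only the HTTP status code; -k skips certificate verification.
probe_dashboard() {
  curl -k -s -o /dev/null -w '%{http_code}\n' "$(dashboard_url "$1" "$2")"
}

# Example call, using the values from the output above:
#   probe_dashboard 192.168.243.146 443:8523/TCP
```

Printing just the status code keeps the output short, which is handy when the same probe is run against every node.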

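About custom certificates

By default the dashboard's certificate is generated automatically, so it is not a trusted certificate. If you have a domain name and a matching trusted certificate, you can replace the default one and access the dashboard through the secured domain name.

Add startup arguments for the dashboard in dashboard-all.yaml to specify the certificate files; the certificate files themselves are injected through a secret.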

- --tls-cert-file=dashboard.cer

- --tls-key-file=dashboard.key

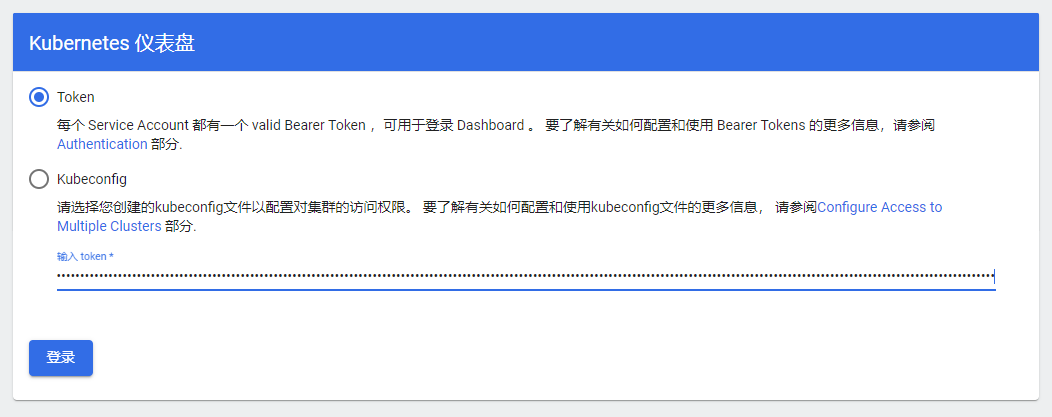
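Since the certificate files reach the container through the kubernetes-dashboard-certs secret, replacing the certificate comes down to recreating that secret. Here is a rough sketch of how that could look. The function name `replace_dashboard_certs` and the `KUBECTL` override variable are assumptions for illustration only, and the sketch assumes the secret keys should be named dashboard.cer and dashboard.key to match the two arguments above.

```shell
#!/usr/bin/env bash
# Sketch: replace the auto-generated dashboard certificate with your own.
# KUBECTL is a hypothetical override (e.g. KUBECTL=echo previews the commands).

replace_dashboard_certs() {
  local cert="$1" key="$2"
  local ns=kubernetes-dashboard
  local kubectl="${KUBECTL:-kubectl}"

  # Fail early if either file is missing.
  [ -f "$cert" ] || { echo "certificate not found: $cert" >&2; return 1; }
  [ -f "$key" ]  || { echo "key not found: $key" >&2; return 1; }

  # Remove the secret created by dashboard-all.yaml, if it exists.
  "$kubectl" delete secret kubernetes-dashboard-certs -n "$ns" --ignore-not-found || return 1

  # Recreate it; the key names must match --tls-cert-file / --tls-key-file.
  "$kubectl" create secret generic kubernetes-dashboard-certs -n "$ns" \
    --from-file=dashboard.cer="$cert" \
    --from-file=dashboard.key="$key" || return 1

  # Restart the deployment so the pod starts with the new files.
  "$kubectl" rollout restart deployment kubernetes-dashboard -n "$ns"
}

# Example call (paths are placeholders):
#   replace_dashboard_certs /path/to/dashboard.cer /path/to/dashboard.key
```

Running it once with `KUBECTL=echo` prints the three commands without touching the cluster, which makes it easy to review them first.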

Logging in to the dashboard

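By default the Dashboard only supports token authentication, so if you log in with a KubeConfig file, the token has to be specified in that file. Here we log in with a token directly.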
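For the KubeConfig route, the token ends up in the user entry of the file. As a sketch only (we do not use this route here): the function name `write_token_kubeconfig`, the `KUBECTL` override variable, and the cluster, user and context names are placeholders chosen for illustration, and the server address, CA file and token must come from your own cluster.

```shell
#!/usr/bin/env bash
# Sketch: build a kubeconfig file whose user entry carries a bearer token.
# KUBECTL is a hypothetical override (e.g. KUBECTL=echo previews the commands).

write_token_kubeconfig() {
  local server="$1" ca_file="$2" token="$3" out="$4"
  local kubectl="${KUBECTL:-kubectl}"

  # Cluster entry: API server address plus the embedded CA certificate.
  "$kubectl" config set-cluster kubernetes \
    --server="$server" --certificate-authority="$ca_file" \
    --embed-certs=true --kubeconfig="$out" || return 1

  # User entry: this is where the token is stored.
  "$kubectl" config set-credentials dashboard-admin \
    --token="$token" --kubeconfig="$out" || return 1

  # Context tying the cluster and the user together.
  "$kubectl" config set-context default \
    --cluster=kubernetes --user=dashboard-admin --kubeconfig="$out" || return 1

  "$kubectl" config use-context default --kubeconfig="$out"
}

# Example call (every value is a placeholder):
#   write_token_kubeconfig https://<apiserver>:<port> /path/to/ca.pem "$TOKEN" dashboard.kubeconfig
```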

First, create a service account:

[root@m1 ~]# kubectl create sa dashboard-admin -n kube-system

serviceaccount/dashboard-admin created

[root@m1 ~]#

Create the cluster role binding:

[root@m1 ~]# kubectl create clusterrolebinding dashboard-admin --clusterrole=cluster-admin --serviceaccount=kube-system:dashboard-admin

clusterrolebinding.rbac.authorization.k8s.io/dashboard-admin created

[root@m1 ~]#

Look up the name of the dashboard-admin Secret:

[root@m1 ~]# kubectl get secrets -n kube-system | grep dashboard-admin | awk '{print $1}'

dashboard-admin-token-757fb

[root@m1 ~]#

Print the Secret's token:

[root@m1 ~]# ADMIN_SECRET=$(kubectl get secrets -n kube-system | grep dashboard-admin | awk '{print $1}')

[root@m1 ~]# kubectl describe secret -n kube-system ${ADMIN_SECRET} | grep -E '^token' | awk '{print $2}'